December 02, 2020

Dustin Mitchell

Taskcluster's DB (Part 3) - Online Migrations

This is part 3 of a deep-dive into the implementation details of Taskcluster’s backend data stores. If you missed the first two, see part 1 and part 2 for the background, as we’ll jump right in here!

Big Data

A few of the tables holding data for Taskcluster contain tens or hundreds of millions of rows. That’s not what the cool kids mean when they say “Big Data”, but it’s big enough that migrations take a long time. Most changes to Postgres tables take a full lock on that table, preventing other operations from occurring while the change takes place. The duration of the operation depends on lots of factors: not just the size of the data already in the table, but also the kind of other operations going on at the same time.

The usual approach is to schedule a system downtime to perform time-consuming database migrations, and that’s just what we did in July. By running it on a clone of the production database, we determined that we could perform the migration completely in six hours. It turned out to take a lot longer than that. Partly, this was because we missed some things when we shut the system down, and left some concurrent operations running on the database. But by the time we realized that things were moving too slowly, we were near the end of our migration window and had to roll back. The time-consuming migration was version 20 - migrate queue_tasks, and it had been estimated to take about 4.5 hours.

When we rolled back, the DB was at version 19, but the code running the Taskcluster services corresponded to version 12. Happily, we had planned for this situation, and the redefined stored functions described in part 2 bridged the gap with no issues.

Patch-Fix

Our options were limited: scheduling another extended outage would have been difficult. We didn’t solve all of the mysteries of the poor performance, either, so we weren’t confident in our prediction of the time required.

The path we chose was to perform an “online migration”. I wrote a custom migration script to accomplish this. Let’s look at how that worked.

The goal of the migration was to rewrite the queue_tasks_entities table, with a few hundred million rows, into a tasks table.

The idea with the online migration was to create an empty tasks table (a very quick operation), then rewrite the stored functions to write to tasks, while reading from both tables.

Then a background task can move rows from the queue_tasks_entities table to the tasks table without blocking concurrent operations.

Once the old table is empty, it can be removed and the stored functions rewritten to address only the tasks table.

A few things made this easier than it might have been.

Taskcluster’s tasks have a deadline after which they become immutable, typically within one week of the task’s creation.

That means that the task mutation functions can change the task in-place in whichever table they find it in.

The background task only moves tasks with deadlines in the past.

This eliminates any concerns about data corruption if a row is migrated while it is being modified.
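The read-both, write-new arrangement can be sketched in a few lines. This is only an illustration, assuming in-memory maps in place of the two Postgres tables; the function names (createTask, getTask, modifyTask, moveBatch) are hypothetical and are not the stored functions from the real migration script.

```javascript
// In-memory stand-ins for the two Postgres tables (hypothetical shapes).
const oldTable = new Map(); // plays the role of queue_tasks_entities
const newTable = new Map(); // plays the role of tasks

// New rows are written only to the new table.
function createTask(task) {
  newTable.set(task.taskId, task);
}

// Reads consult both tables, preferring the new one.
function getTask(taskId) {
  return newTable.get(taskId) ?? oldTable.get(taskId);
}

// Mutations change the row in place, in whichever table holds it.
function modifyTask(taskId, modifier) {
  const task = getTask(taskId);
  if (!task) {
    throw new Error(`no such task: ${taskId}`);
  }
  modifier(task);
}

// The background mover only touches rows whose deadline has passed. Such
// rows are immutable, so no modification can race with the move.
function moveBatch(now, batchSize) {
  let moved = 0;
  for (const [taskId, task] of oldTable) {
    if (moved >= batchSize) {
      break;
    }
    if (task.deadline < now) {
      newTable.set(taskId, task);
      oldTable.delete(taskId);
      moved++;
    }
  }
  return moved;
}
```

Calling moveBatch repeatedly as deadlines pass eventually drains the old table, at which point the read-both logic is no longer needed.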

A look at the script linked above shows that there were some complicating factors, too – notably, two more tables to manage – but those factors didn’t change the structure of the migration.

With this in place, we ran the replacement migration script, creating the new tables and updating the stored functions. Then a one-off JS script drove migration of post-deadline tasks with a rough ETA calculation. We figured this script would run for about a week, but in fact it was done in just a few days. Finally, we cleaned up the temporary functions, leaving the DB in precisely the state that the original migration script would have generated.

Supported Online Migrations

After this experience, we knew we would run into future situations where a “regular” migration would be too slow. Apart from that, we want users to be able to deploy Taskcluster without scheduling downtimes: requiring downtimes will encourage users to stay at old versions, missing features and bugfixes and increasing our maintenance burden.

We devised a system to support online migrations in any migration.

Its structure is pretty simple: after each migration script is complete, the harness that handles migrations calls a _batch stored function repeatedly until it signals that it is complete.

This process can be interrupted and restarted as necessary.

The “cleanup” portion (dropping unnecessary tables or columns and updating stored functions) must be performed in a subsequent DB version.

The harness is careful to call the previous version’s online-migration function before it starts a version’s upgrade, to ensure it is complete. As with the old “quick” migrations, all of this is also supported in reverse to perform a downgrade.

The _batch functions are passed a state parameter that they can use as a bookmark.

For example, a migration of the tasks might store the last taskId that it migrated in its state.

Then each batch can begin with select .. where task_id > last_task_id, allowing Postgres to use the index to quickly find the next task to be migrated.

When the _batch function indicates that it processed zero rows, the harness calls an _is_completed function.

If this function returns false, then the whole process starts over with an empty state.

This is useful for tables where some rows were skipped during the migration, such as tasks with deadlines in the future.
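The loop the harness runs might look roughly like the sketch below. The function signatures, the shape of the state object, and the in-memory "tables" are all assumptions made for illustration; the real functions are stored functions in Postgres and the real harness is asynchronous.

```javascript
// Drive one online migration to completion. `batch(batchSize, state)` and
// `isCompleted()` stand in for a version's _batch and _is_completed stored
// functions; these signatures are assumptions for this sketch.
function runOnlineMigration({batch, isCompleted, batchSize = 2}) {
  let state = {};
  let total = 0;
  while (true) {
    const result = batch(batchSize, state);
    total += result.count;
    state = result.state;
    if (result.count === 0) {
      if (isCompleted()) {
        return total;
      }
      // Some rows were skipped earlier: start over with an empty state.
      state = {};
    }
  }
}

// Example: migrate rows in taskId order, using the last migrated taskId
// as the bookmark stored in the state.
const oldRows = ['t1', 't2', 't3', 't4', 't5'];
const migrated = [];

function tasksBatch(batchSize, state) {
  const last = state.lastTaskId ?? '';
  // In spirit: select .. where task_id > last_task_id order by task_id limit batchSize
  const rows = oldRows
    .filter(id => id > last && !migrated.includes(id))
    .sort()
    .slice(0, batchSize);
  migrated.push(...rows);
  return {
    count: rows.length,
    state: rows.length ? {lastTaskId: rows[rows.length - 1]} : state,
  };
}

function tasksIsCompleted() {
  return oldRows.every(id => migrated.includes(id));
}
```

Because the only thing carried between calls is the state bookmark, the loop can be stopped and resumed at any batch boundary.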

Testing

An experienced engineer is, at this point, boggling at the number of ways this could go wrong! There are lots of points at which a migration might fail or be interrupted, and the operators might then begin a downgrade. Perhaps that downgrade is then interrupted, and the migration re-started! A stressful moment like this is the last time anyone wants surprises, but these are precisely the circumstances that are easily forgotten in testing.

To address this, and to make such testing easier, we developed a test framework that defines a suite of tests for all manner of circumstances. In each case, it uses callbacks to verify proper functionality at every step of the way. It tests both the “happy path” of a successful migration and the “unhappy paths” involving failed migrations and downgrades.

In Practice

The impetus to actually implement support for online migrations came from some work that Alex Lopez has been doing to change the representation of worker pools in the queue.

This requires rewriting the tasks table to transform the provisioner_id and worker_type columns into a single, slash-separated task_queue_id column.
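As a rough illustration of the transformation (and of the reverse mapping a downgrade needs), assuming that neither identifier contains a slash. The function names and example values here are hypothetical, not taken from the pull request.

```javascript
// Combine the two legacy columns into a single slash-separated identifier.
function toTaskQueueId(provisionerId, workerType) {
  return `${provisionerId}/${workerType}`;
}

// Split the identifier back into its parts, as a downgrade would need to.
// Assumption: neither part contains a slash.
function fromTaskQueueId(taskQueueId) {
  const parts = taskQueueId.split('/');
  if (parts.length !== 2) {
    throw new Error(`invalid task queue ID: ${taskQueueId}`);
  }
  return {provisionerId: parts[0], workerType: parts[1]};
}

// Hypothetical example values.
const exampleTaskQueueId = toTaskQueueId('proj-example', 'ci-linux');
const exampleParts = fromTaskQueueId(exampleTaskQueueId);
```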

The pull request is still in progress as I write this, but already serves as a great practical example of an online migration (and online downgrade, and tests).

Summary

As we’ve seen in this three-part series, Taskcluster’s data backend has undergone a radical transformation this year, from a relatively simple NoSQL service to a full Postgres database with sophisticated support for ongoing changes to the structure of that DB.

In some respects, Taskcluster is no different from countless other web services abstracting over a data-storage backend. Indeed, Django provides robust support for database migrations, as do many other application frameworks. One factor that sets Taskcluster apart is that it is a “shipped” product, with semantically-versioned releases which users can deploy on their own schedule. Unlike for a typical web application, we – the software engineers – are not “around” for the deployment process, aside from the Mozilla deployments. So, we must make sure that the migrations are well-tested and will work properly in a variety of circumstances.

We did all of this with minimal downtime and no data loss or corruption. This involved thousands of lines of new code written, tested, and reviewed; a new language (SQL) for most of us; and lots of close work with the Cloud Operations team to perform dry runs, evaluate performance, and debug issues. It couldn’t have happened without the hard work and close collaboration of the whole Taskcluster team. Thanks to the team, and thanks to you for reading this short series!

October 30, 2020

Dustin Mitchell

Taskcluster's DB (Part 2) - DB Migrations

This is part 2 of a deep-dive into the implementation details of Taskcluster’s backend data stores. Check out part 1 for the background, as we’ll jump right in here!

Azure in Postgres

As of the end of April, we had all of our data in a Postgres database, but the data was pretty ugly. For example, here’s a record of a worker as recorded by worker-manager:

partition_key | testing!2Fstatic-workers
row_key | cc!2Fdd~ee!2Fff
value | {
    "state": "requested",
    "RowKey": "cc!2Fdd~ee!2Fff",
    "created": "2015-12-17T03:24:00.000Z",
    "expires": "3020-12-17T03:24:00.000Z",
    "capacity": 2,
    "workerId": "ee/ff",
    "providerId": "updated",
    "lastChecked": "2017-12-17T03:24:00.000Z",
    "workerGroup": "cc/dd",
    "PartitionKey": "testing!2Fstatic-workers",
    "lastModified": "2016-12-17T03:24:00.000Z",
    "workerPoolId": "testing/static-workers",
    "__buf0_providerData": "eyJzdGF0aWMiOiJ0ZXN0ZGF0YSJ9Cg==",
    "__bufchunks_providerData": 1
}
version | 1
etag | 0f6e355c-0e7c-4fe5-85e3-e145ac4a4c6c

To reap the goodness of a relational database, that would be a “normal”[*] table: distinct columns, nice data types, and a lot less redundancy.

All access to this data is via some Azure-shaped stored functions, which are also not amenable to the kinds of flexible data access we need:

- <tableName>_load - load a single row

- <tableName>_create - create a new row

- <tableName>_remove - remove a row

- <tableName>_modify - modify a row

- <tableName>_scan - return some or all rows in the table

[*] In the normal sense of the word – we did not attempt to apply database normalization.

Database Migrations

So the next step, which we dubbed “phase 2”, was to migrate this schema to one more appropriate to the structure of the data.

The typical approach is to use database migrations for this kind of work, and there are lots of tools for the purpose. For example, Alembic and Django both provide robust support for database migrations – but they are both in Python.

The only mature JS tool is knex, and after some analysis we determined that it both lacked features we needed and brought a lot of additional features that would complicate our usage. It is primarily a “query builder”, with basic support for migrations. Because we target Postgres directly, and because of how we use stored functions, a query builder is not useful. And the migration support in knex, while effective, does not support the more sophisticated approaches to avoiding downtime outlined below.

We elected to roll our own tool, allowing us to get exactly the behavior we wanted.

Migration Scripts

Taskcluster defines a sequence of numbered database versions. Each version corresponds to a specific database schema, which includes the structure of the database tables as well as stored functions. The YAML file for each version specifies a script to upgrade from the previous version, and a script to downgrade back to that version. For example, an upgrade script might add a new column to a table, with the corresponding downgrade dropping that column.

version: 29
migrationScript: |-
  begin
    alter table secrets add column last_used timestamptz;
  end
downgradeScript: |-
  begin
    alter table secrets drop column last_used;
  end
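To make the version sequence concrete, here is a minimal sketch of how a tool could decide which scripts to run when moving between two database versions. The planMigration function and the shape of its result are hypothetical, not the actual Taskcluster implementation.

```javascript
// Given the DB's current version and a target version, list the scripts to
// run, in order. Upgrading runs each newer version's migrationScript;
// downgrading runs each version's downgradeScript, newest first.
function planMigration(currentVersion, targetVersion) {
  const steps = [];
  if (targetVersion > currentVersion) {
    for (let v = currentVersion + 1; v <= targetVersion; v++) {
      steps.push({version: v, script: 'migrationScript'});
    }
  } else {
    for (let v = currentVersion; v > targetVersion; v--) {
      steps.push({version: v, script: 'downgradeScript'});
    }
  }
  return steps;
}
```

For example, planMigration(28, 29) yields a single step running version 29's migrationScript, and planMigration(29, 28) yields a single step running version 29's downgradeScript.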

So far, this is a pretty normal approach to migrations. However, a major drawback is that it requires careful coordination around the timing of the migration and deployment of the corresponding code. Continuing the example of adding a new column, if the migration is deployed first, then the existing code may execute INSERT queries that omit the new column. If the new code is deployed first, then it will attempt to read a column that does not yet exist.

There are workarounds for these issues. In this example, we could add a default value for the new column in the migration, or write the queries such that they are robust to a missing column. Such queries are typically spread around the codebase, though, and it can be difficult to ensure (by testing, of course) that they all operate correctly.

In practice, most uses of database migrations are continuously-deployed applications – a single website or application server, where the developers of the application control the timing of deployments. That allows a great deal of control, and changes can be spread out over several migrations that occur in rapid succession.

Taskcluster is not continuously deployed – it is released in distinct versions which users can deploy on their own cadence. So we need a way to run migrations when upgrading to a new Taskcluster release, without breaking running services.

Stored Functions

Part 1 mentioned that all access to data is via stored functions. This is the critical point of abstraction that allows smooth migrations, because stored functions can be changed at runtime.

Each database version specifies definitions for stored functions, either introducing new functions or replacing the implementation of existing functions.

So the version: 29 YAML above might continue with

methods:
  create_secret:
    args: name text, value jsonb
    returns: void
    body: |-
      begin
        insert
        into secrets (name, value, last_used)
        values (name, value, now());
      end
  get_secret:
    args: name text
    returns: record
    body: |-
      begin
        update secrets
        set last_used = now()
        where secrets.name = get_secret.name;

        return query
        select name, value from secrets
        where secrets.name = get_secret.name;
      end

This redefines two existing functions to operate properly against the new table.

The functions are redefined in the same database transaction as the migrationScript above, meaning that any calls to create_secret or get_secret will immediately begin populating the new column.

A critical rule (enforced in code) is that the arguments and return type of a function cannot be changed.
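A check of this kind could be sketched as follows. The data shape (a list of versions, each with a methods map carrying args and returns strings) mirrors the YAML above, but the checkMethodSignatures function and the earlier version number used in the example are hypothetical, not the actual enforcement code.

```javascript
// Walk the versions in order and report any method whose arguments or
// return type differ from the first definition of that method.
function checkMethodSignatures(versions) {
  const firstSeen = new Map();
  const errors = [];
  for (const {version, methods} of versions) {
    for (const [name, {args, returns}] of Object.entries(methods)) {
      const prev = firstSeen.get(name);
      if (!prev) {
        firstSeen.set(name, {args, returns});
      } else if (prev.args !== args || prev.returns !== returns) {
        errors.push(`version ${version} changes the signature of ${name}`);
      }
    }
  }
  return errors;
}

// Hypothetical example: the second version redefines get_secret compatibly,
// but changes the arguments of create_secret.
const exampleErrors = checkMethodSignatures([
  {version: 10, methods: {
    create_secret: {args: 'name text, value jsonb', returns: 'void'},
    get_secret: {args: 'name text', returns: 'record'},
  }},
  {version: 29, methods: {
    create_secret: {args: 'name text, value jsonb, last_used timestamptz', returns: 'void'},
    get_secret: {args: 'name text', returns: 'record'},
  }},
]);
```

Here exampleErrors contains one entry, for create_secret; a new function with a new name would be the compatible way to expose the extra argument.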

To support new code that references the last_used value, we add a new function:

get_secret_with_last_used:
  args: name text
  returns: record
  body: |-
    begin
      update secrets
      set last_used = now()
      where secrets.name = get_secret_with_last_used.name;

      return query
      select name, value, last_used from secrets
      where secrets.name = get_secret_with_last_used.name;
    end

Another critical rule is that DB migrations must be applied fully before the corresponding version of the JS code is deployed.

In this case, that means code that uses get_secret_with_last_used is deployed only after the function is defined.

All of this can be thoroughly tested in isolation from the rest of the Taskcluster code, both unit tests for the functions and integration tests for the upgrade and downgrade scripts. Unit tests for redefined functions should continue to pass, unchanged, providing an easy-to-verify compatibility check.

Phase 2 Migrations

The migrations from Azure-style tables to normal tables are, as you might guess, a lot more complex than this simple example. Among the issues we faced:

- Azure-entities uses a multi-field base64 encoding for many data-types, that must be decoded (such as __buf0_providerData/__bufchunks_providerData in the example above)

- Partition and row keys are encoded using a custom variant of urlencoding that is remarkably difficult to implement in pl/pgsql

- Some columns (such as secret values) are encrypted.

- Postgres generates slightly different ISO8601 timestamps from JS’s Date.toJSON()
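To give a feel for the first two issues, here is a JavaScript sketch that decodes the worker record shown earlier. It rests on assumptions inferred from that example only: that "!XX" in a key is a hex escape with "!" standing in for "%", that the row key joins its parts with "~", and that each __bufN_ field is an independently base64-encoded chunk. The actual azure-entities encoding has more cases than this, and the real migrations had to do the equivalent work in pl/pgsql.

```javascript
// Decode an Azure-style key. Assumption: "!XX" is a percent-style hex escape
// with "!" in place of "%", as the "!2F" -> "/" pairs in the example suggest.
function decodeKey(encoded) {
  return decodeURIComponent(encoded.replace(/!/g, '%'));
}

// Reassemble a chunked base64 property and parse it. In the example record
// the decoded bytes happen to be JSON, so this sketch parses them as such.
function decodeBufferProperty(value, name) {
  const chunkCount = value[`__bufchunks_${name}`];
  const chunks = [];
  for (let i = 0; i < chunkCount; i++) {
    chunks.push(Buffer.from(value[`__buf${i}_${name}`], 'base64'));
  }
  return JSON.parse(Buffer.concat(chunks).toString('utf8'));
}

// Values copied from the example record above.
const exampleValue = {
  '__buf0_providerData': 'eyJzdGF0aWMiOiJ0ZXN0ZGF0YSJ9Cg==',
  '__bufchunks_providerData': 1,
};
const workerPoolId = decodeKey('testing!2Fstatic-workers');
const [workerGroup, workerId] = 'cc!2Fdd~ee!2Fff'.split('~').map(decodeKey);
const providerData = decodeBufferProperty(exampleValue, 'providerData');
```

The decoded keys match the workerPoolId, workerGroup, and workerId fields already present in the record's value, which is part of the redundancy the phase 2 tables remove.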

We split the work of performing these migrations across the members of the Taskcluster team, supporting each other through the tricky bits, in a rather long but ultimately successful “Postgres Phase 2” sprint.

0042 - secrets phase 2

Let’s look at one of the simpler examples: the secrets service.

The migration script creates a new secrets table from the data in the secrets_entities table, using Postgres’s JSON functions to unpack the value column into “normal” columns.

The database version YAML file carefully redefines the Azure-compatible DB functions to access the new secrets table.

This involves unpacking function arguments from their JSON formats, re-packing JSON blobs for return values, and even some light parsing of the condition string for the secrets_entities_scan function.

It then defines new stored functions for direct access to the normal table. These functions are typically similar, and more specific to the needs of the service. For example, the secrets service only modifies secrets in an “upsert” operation that replaces any existing secret of the same name.

Step By Step

To achieve an extra layer of confidence in our work, we landed all of the phase-2 PRs in two steps. The first step included migration and downgrade scripts and the redefined stored functions, as well as tests for those. But critically, this step did not modify the service using the table (the secrets service in this case). So the unit tests for that service use the redefined stored functions, acting as a kind of integration-test for their implementations. This also validates that the service will continue to run in production between the time the database migration is run and the time the new code is deployed. We landed this step on GitHub in such a way that reviewers could see a green check-mark on the step-1 commit.

In the second step, we added the new, purpose-specific stored functions and rewrote the service to use them. In services like secrets, this was a simple change, but some other services saw more substantial rewrites due to more complex requirements.

Deprecation

Naturally, we can’t continue to support old functions indefinitely: eventually they would be prohibitively complex or simply impossible to implement. Another deployment rule provides a critical escape from this trap: Taskcluster must be upgraded at most one major version at a time (e.g., 36.x to 37.x). That provides a limited window of development time during which we must maintain compatibility.

Defining that window is surprisingly tricky, but essentially it’s two major revisions. Like the software engineers we are, we packaged up that tricky computation in a script, and include the lifetimes in some generated documentation.

What’s Next?

This post has hinted at some of the complexity of “phase 2”. There are lots of details omitted, of course!

But there’s one major detail that got us in a bit of trouble.

In fact, we were forced to roll back during a planned migration – not an engineer’s happiest moment.

The queue_tasks_entities and queue_artifacts_entities tables were just too large to migrate in any reasonable amount of time.

Part 3 will describe what happened, how we fixed the issue, and what we’re doing to avoid having the same issue again.

October 28, 2020

Dustin Mitchell

Taskcluster's DB (Part 1) - Azure to Postgres

This is a deep-dive into some of the implementation details of Taskcluster. Taskcluster is a platform for building continuous integration, continuous deployment, and software-release processes. It’s an open source project that began life at Mozilla, supporting the Firefox build, test, and release systems.

The Taskcluster “services” are a collection of microservices that handle distinct tasks: the queue coordinates tasks; the worker-manager creates and manages workers to execute tasks; the auth service authenticates API requests; and so on.

Azure Storage Tables to Postgres

Until April 2020, Taskcluster stored its data in Azure Storage tables, a simple NoSQL-style service similar to AWS’s DynamoDB. Briefly, each Azure table is a list of JSON objects with a single primary key composed of a partition key and a row key. Lookups by primary key are fast and parallelize well, but scans of an entire table are extremely slow and subject to API rate limits. Taskcluster was carefully designed within these constraints, but that meant that some useful operations, such as listing tasks by their task queue ID, were simply not supported. Switching to a fully-relational datastore would enable such operations, while easing deployment of the system for organizations that do not use Azure.

Always Be Migratin’

In April, we migrated the existing deployments of Taskcluster (at that time all within Mozilla) to Postgres. This was a “forklift migration”, in the sense that we moved the data directly into Postgres with minimal modification. Each Azure Storage table was imported into a single Postgres table of the same name, with a fixed structure:

create table queue_tasks_entities(
  partition_key text,
  row_key text,
  value jsonb not null,
  version integer not null,
  etag uuid default public.gen_random_uuid()
);
alter table queue_tasks_entities add primary key (partition_key, row_key);

The importer we used was specially tuned to accomplish this import in a reasonable amount of time (hours). For each known deployment, we scheduled a downtime to perform this migration, after extensive performance testing on development copies.

We considered options to support a downtime-free migration. For example, we could have built an adapter that would read from Postgres and Azure, but write to Postgres. This adapter could support production use of the service while a background process copied data from Azure to Postgres.

This option would have been very complex, especially in supporting some of the atomicity and ordering guarantees that the Taskcluster API relies on. Failures would likely lead to data corruption and a downtime much longer than the simpler, planned downtime. So, we opted for the simpler, planned migration. (We’ll revisit the idea of online migrations in part 3.)

The database for Firefox CI occupied about 350GB. The other deployments, such as the community deployment, were much smaller.

Database Interface

All access to Azure Storage tables had been via the azure-entities library, with a limited and very regular interface (hence the _entities suffix on the Postgres table name).

We wrote an implementation of the same interface, but with a Postgres backend, in taskcluster-lib-entities.

The result was that none of the code in the Taskcluster microservices changed.

Not changing code is a great way to avoid introducing new bugs!

It also limited the complexity of this change: we only had to deeply understand the semantics of azure-entities, and not the details of how the queue service handles tasks.

Stored Functions

As the taskcluster-lib-entities README indicates, access to each table is via five stored database functions:

- <tableName>_load - load a single row

- <tableName>_create - create a new row

- <tableName>_remove - remove a row

- <tableName>_modify - modify a row

- <tableName>_scan - return some or all rows in the table

Stored functions are functions defined in the database itself, that can be redefined within a transaction. Part 2 will get into why we made this choice.

Optimistic Concurrency

The modify function is an interesting case.

Azure Storage has no notion of a “transaction”, so the azure-entities library uses an optimistic-concurrency approach to implement atomic updates to rows.

This uses the etag column, which changes to a new value on every update, to detect and retry concurrent modifications.

While Postgres can do much better, we replicated this behavior in taskcluster-lib-entities, again to limit the changes made and avoid introducing new bugs.

A modification looks like this in JavaScript:

await task.modify(task => {
  if (task.status !== 'running') {
    task.status = 'running';
    task.started = now();
  }
});

For those not familiar with JS notation, this is calling the modify method on a task, passing a modifier function which, given a task, modifies that task.

The modify method calls the modifier and tries to write the updated row to the database, conditioned on the etag still having the value it did when the task was loaded.

If the etag does not match, modify re-loads the row to get the new etag, and tries again until it succeeds.

The effect is that updates to the row occur one-at-a-time.

This approach is “optimistic” in the sense that it assumes no conflicts, and does extra work (retrying the modification) only in the unusual case that a conflict occurs.
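The retry loop inside modify can be sketched as below. This is a simplified, synchronous illustration against an in-memory row, with a counter in place of the UUID etag; the helper names (load, conditionalUpdate) and the beforeWrite hook are hypothetical and exist only to make the conflict visible.

```javascript
// In-memory stand-in for one table row. The real etag is a UUID column.
let etagCounter = 0;
const row = {value: {status: 'pending'}, etag: ++etagCounter};

// Load a copy of the row along with the etag it had at load time.
function load() {
  return {value: {...row.value}, etag: row.etag};
}

// Write only if the etag is unchanged since load. Returns false on conflict.
function conditionalUpdate(newValue, expectedEtag) {
  if (row.etag !== expectedEtag) {
    return false;
  }
  row.value = newValue;
  row.etag = ++etagCounter;
  return true;
}

// Apply the modifier and retry from a fresh load until the write succeeds.
// `beforeWrite` is a hook used here only to simulate a concurrent writer.
function modify(modifier, {beforeWrite = () => {}} = {}) {
  let attempts = 0;
  while (true) {
    attempts++;
    const {value, etag} = load();
    modifier(value);
    beforeWrite(attempts);
    if (conditionalUpdate(value, etag)) {
      return attempts;
    }
  }
}
```

Note that the modifier may run more than once, so it should depend only on the task it is given and have no side effects of its own.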

What’s Next?

At this point, we had fork-lifted Azure tables into Postgres and no longer required an Azure account to run Taskcluster. However, we hadn’t yet seen any of the benefits of a relational database:

- data fields were still trapped in a JSON object (in fact, some kinds of data were hidden in base64-encoded blobs)

- each table still only had a single primary key, and queries by any other field would still be prohibitively slow

- joins between tables would also be prohibitively slow

Part 2 of this series of articles will describe how we addressed these issues. Then part 3 will get into the details of performing large-scale database migrations without downtime.

August 24, 2020

Pete Moore

ZX Spectrum +4 - kind of

The ZX Spectrum +2A was my first computer, and I really loved it. On it, I learned to program (badly), and learned how computers worked (or so I thought). I started writing my first computer programs in Sinclair BASIC, and tinkered a little with Z80 machine code (I didn’t have an assembler, but I did have a book that documented the opcodes and operand encodings for each of the Z80 assembly mnemonics).

Fast forward 25 years, and I found myself middle aged, having spent my career thus far as a programmer, but never writing any assembly (let alone machine code), and having lost touch with the low level computing techniques that attracted me to programming in the first place.

So I decided to change that, and start a new project. My idea was to adapt the original Spectrum 128K ROM from Z80 machine code to 64 bit ARM assembly, running on the Raspberry Pi 3B (which I happened to own). The idea was not to create an emulator (there are plenty of those around), but instead to create a new “operating system” (monitor program would probably be a more accurate term) that had roughly the same feature set as the original Spectrum computers, but designed to run on modern hardware, at faster speeds, with higher resolution, more sophisticated sound etc.

What I loved about the original Spectrum was the ease with which you could learn to program, and the simplicity of the platform. You did not need to study computer science for 30 years to understand it. That isn’t true of modern operating systems, which are much more complex. I wanted to create something simple and intuitive again, that would provide a similar computing experience. Said another way, I wanted to create something with the sophistication of the original Spectrum, but that would run on modern hardware. Since it was meant to be an evolution of the Spectrum, I decided to call it the ZX Spectrum +4 (since the ZX Spectrum +3 was the last Spectrum that was ever made).

Well, it is a work-in-progress, and has been a lot of fun to write so far. Please feel free to get involved with the project, and leave a comment, or open an issue or pull request against the repository. I think I have a fair bit of work to do, but it is doable. The original Spectrum ROMs were 16K each, so there is 32K of Z80 machine code and tables to translate, but given that instructions are variable length (typically 1, 2, or 3 bytes) there are probably something like 15,000 instructions to translate, which could be a year or two of hobby time, given my other commitments. Or less, if other people get involved! :-)

The github repo can be found here.

Open Source Alternative to ntrights.exe

If you wish to modify LSA policy programmatically on Windows, the ntrights.exe utility from the Windows 2000 Server Resource Kit may help you. But if you need to ship a product that uses it, you may wish to consider an open source tool to avoid any licensing issues.

Needing to do a similar thing myself, I’ve written the ntr open source utility for this purpose. It contains both a standalone executable (like ntrights.exe) and a Go library interface.

The project is on github here.

I hope you find it useful!

May 06, 2020

Dustin Mitchell

Debugging Docker Connection Reset by Peer

(this post is co-written with @imbstack and cross-posted on his blog)

Symptoms

At the end of January this year the Taskcluster team was alerted to networking issues in a user’s tasks. The first report involved ETIMEDOUT, but later on it became clear that the more frequent issue involved ECONNRESET in the middle of downloading artifacts necessary to run the tests in the tasks. It seemed to occur only on downloads from Google (https://dl.google.com) on our workers running in GCP, and only with relatively large artifacts. This led us to initially blame some bit of infrastructure outside of Taskcluster, but eventually we found the issue to be with how Docker was handling networking on our worker machines.

Investigation

The initial stages of the investigation were focused on exploring possible causes of the error and on finding a way to reproduce the error.

Investigation of an intermittent error in a high-volume system like this is slow and difficult work. It’s difficult to know if an intervention fixed the issue just because the error does not recur. And it’s difficult to know if an intervention did not fix the issue, as “Connection reset by peer” can be due to transient network hiccups. It’s also difficult to gather data from production systems as the quantity of data per failure is unmanageably high.

We explored a few possible causes of the issue, all of which turned out to be dead ends.

- Rate Limiting or Abuse Prevention - The TC team has seen cases where downloads from compute clouds were limited as a form of abuse prevention. Like many CI processes, the WPT jobs download Chrome on every run, and it’s possible that a series of back-to-back tasks on the same worker could appear malicious to an abuse-prevention device.

- Outages of the download server - This was unlikely, given Google’s operational standards, but worth exploring since the issues seemed limited to dl.google.com.

- Exhaustion of Cloud NAT addresses - Resource exhaustion in the compute cloud might have been related. This was easily ruled out with the observation that workers are not using Cloud NAT.

At the same time, several of us were working on reproducing the issue in more controlled circumstances. This began with interactive sessions on Taskcluster workers, and soon progressed to a script that reproduced the issue easily on a GCP instance running the VM image used to run workers. An important observation here was that the issue only reproduced inside of a docker container: downloads from the host worked just fine. This seemed to affect all docker images, not just the image used in WPT jobs.

At this point, we were able to use Taskcluster itself to reproduce the issue at scale, creating a task group of identical tasks running the reproduction recipe. The “completed” tasks in that group are the successful reproductions.

Armed with quick, reliable reproduction, we were able to start capturing dumps of the network traffic. From these, we learned that the downloads were failing mid-download (tens of MB into a ~65MB file). We were also able to confirm that the error is, indeed, a TCP RST segment from the peer.

Searches for similar issues around this time found a blog post entitled “Fix a random network Connection Reset issue in Docker/Kubernetes”, which matched our issue in many respects. It’s a long read, but the summary is that conntrack, which is responsible for maintaining NAT tables in the Linux kernel, sometimes gets mixed up and labels a valid packet as INVALID. The default configuration of iptables forwarding rules is to ignore INVALID packets, meaning that they fall through to the default ACCEPT for the FILTER table. Since the port is not open on the host, the host replies with an RST segment. Docker containers use NAT to translate between the IP of the container and the IP of the host, so this would explain why the issue only occurs in a Docker container.

We were, indeed, seeing INVALID packets as revealed by conntrack -S, but there were some differences from our situation, so we continued investigating.

In particular, the connection errors in the blog post are seen in the opposite direction, and involve a local server for which the author had added some explicit firewall rules.

Since we hypothesized that NAT was involved, we captured packet traces both inside the Docker container and on the host interface, and combined the two. The results were pretty interesting! In the dump output below, 74.125.195.136 is dl.google.com, 10.138.0.12 is the host IP, and 172.17.0.2 is the container IP. 10.138.0.12 is a private IP, suggesting that there is an additional layer of NAT going on between the host IP and the Internet, but this was not the issue.

A “normal” data segment looks like

22:26:19.414064 ethertype IPv4 (0x0800), length 26820: 74.125.195.136.https > 10.138.0.12.60790: Flags [.], seq 35556934:35583686, ack 789, win 265, options [nop,nop,TS val 2940395388 ecr 3057320826], length 26752

22:26:19.414076 ethertype IPv4 (0x0800), length 26818: 74.125.195.136.https > 172.17.0.2.60790: Flags [.], seq 35556934:35583686, ack 789, win 265, options [nop,nop,TS val 2940395388 ecr 3057320826], length 26752

here the first line is outside the container and the second line is inside the container; the SNAT translation has rewritten the host IP to the container IP. The sequence numbers give the range of bytes in the segment, as an offset from the initial sequence number, so we are almost 34MB into the download (from a total of about 65MB) at this point.

We began by looking at the end of the connection, when it failed.

A

22:26:19.414064 ethertype IPv4 (0x0800), length 26820: 74.125.195.136.https > 10.138.0.12.60790: Flags [.], seq 35556934:35583686, ack 789, win 265, options [nop,nop,TS val 2940395388 ecr 3057320826], length 26752

22:26:19.414076 ethertype IPv4 (0x0800), length 26818: 74.125.195.136.https > 172.17.0.2.60790: Flags [.], seq 35556934:35583686, ack 789, win 265, options [nop,nop,TS val 2940395388 ecr 3057320826], length 26752

B

22:26:19.414077 ethertype IPv4 (0x0800), length 2884: 74.125.195.136.https > 10.138.0.12.60790: Flags [.], seq 34355910:34358726, ack 789, win 265, options [nop,nop,TS val 2940395383 ecr 3057320821], length 2816

C

22:26:19.414091 ethertype IPv4 (0x0800), length 56: 10.138.0.12.60790 > 74.125.195.136.https: Flags [R], seq 821696165, win 0, length 0

...

X

22:26:19.416605 ethertype IPv4 (0x0800), length 66: 172.17.0.2.60790 > 74.125.195.136.https: Flags [.], ack 35731526, win 1408, options [nop,nop,TS val 3057320829 ecr 2940395388], length 0

22:26:19.416626 ethertype IPv4 (0x0800), length 68: 10.138.0.12.60790 > 74.125.195.136.https: Flags [.], ack 35731526, win 1408, options [nop,nop,TS val 3057320829 ecr 2940395388], length 0

Y

22:26:19.416715 ethertype IPv4 (0x0800), length 56: 74.125.195.136.https > 10.138.0.12.60790: Flags [R], seq 3900322453, win 0, length 0

22:26:19.416735 ethertype IPv4 (0x0800), length 54: 74.125.195.136.https > 172.17.0.2.60790: Flags [R], seq 3900322453, win 0, length 0

Segment (A) is a normal data segment, forwarded to the container.

But (B) has a much lower sequence number, about 1MB earlier in the stream, and it is not forwarded to the docker container.

Notably, (B) is also about 1/10 the size of the normal data segments – we never figured out why that is the case.

Instead, we see an RST segment (C) sent back to dl.google.com.

This situation repeats a few times: normal segment forwarded, late segment dropped, RST segment sent to peer.

Finally, the docker container sends an ACK segment (X) for the segments it has received so far, and this is answered by an RST segment (Y) from the peer, and that RST segment is forwarded to the container. This final RST segment is reasonable from the peer’s perspective: we have already reset its connection, so by the time it gets (X) the connection has been destroyed. But this is the first the container has heard of any trouble on the connection, so it fails with “Connection reset by peer”.

So it seems that the low-sequence-number segments are being flagged as INVALID by conntrack and causing it to send RST segments. That’s a little surprising – why is conntrack paying attention to sequence numbers at all? From this article it appears this is a security measure, helping to protect sockets behind the NAT from various attacks on TCP.

The second surprise here is that such late TCP segments are present. Scrolling back through the dump output, there are many such packets – enough that manually labeling them is infeasible. However, graphing the sequence numbers shows a clear pattern:

Note that this covers only the last 16ms of the connection (the horizontal axis is in seconds), carrying about 200MB of data (the vertical axis is sequence numbers, indicating bytes). The “fork” in the pattern shows a split between the up-to-date segments, which seem to accelerate, and the delayed segments. The delayed segments are only slightly delayed - 2-3ms. But a spot-check of a few sequence ranges in the dump shows that they had already been retransmitted by the time they were delivered. When such late segments were not dropped by conntrack, the receiver replied to them with what’s known as a duplicate ACK, a form of selective ACK that says “I have received that segment, and in fact I’ve received many segments since then.”
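Labeling the late segments does not have to be manual. The following is a minimal sketch, not the script we used: it assumes the input is tcpdump text output from the host interface only (so each segment appears once), and the function name `find_late_segments` is made up for illustration. It flags any data segment whose sequence range ends at or below the highest byte already seen.

```python
import re

# Matches the relative sequence range tcpdump prints for data segments,
# e.g. "seq 35556934:35583686". ACK-only and RST lines have no range.
SEQ_RANGE = re.compile(r"seq (\d+):(\d+)")


def find_late_segments(lines):
    """Return (start, end, bytes_behind) for each segment that arrives behind the stream."""
    highest_end = 0
    late = []
    for line in lines:
        match = SEQ_RANGE.search(line)
        if match is None:
            continue  # not a data segment
        start, end = int(match.group(1)), int(match.group(2))
        if end <= highest_end:
            # Every byte in this segment has already been seen.
            late.append((start, end, highest_end - end))
        highest_end = max(highest_end, end)
    return late


# Abbreviated host-side lines for segments (A), (B) and (C) from the dump above.
dump = [
    "22:26:19.414064 74.125.195.136.https > 10.138.0.12.60790: Flags [.], seq 35556934:35583686, ack 789, win 265, length 26752",
    "22:26:19.414077 74.125.195.136.https > 10.138.0.12.60790: Flags [.], seq 34355910:34358726, ack 789, win 265, length 2816",
    "22:26:19.414091 10.138.0.12.60790 > 74.125.195.136.https: Flags [R], seq 821696165, win 0, length 0",
]

for start, end, behind in find_late_segments(dump):
    print(f"late segment {start}:{end} is {behind} bytes behind")
```

For segment (B) this reports a gap of 1224960 bytes, which is the "about 1MB earlier" noted above.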

Our best guess here is that some network intermediary has added a slight delay to some packets. But since the RTT on this connection is so short, that delay is relatively huge and puts the delayed packets outside of the window where conntrack is willing to accept them. That helps explain why other downloads, from hosts outside of the Google infrastructure, do not see this issue: either they do not traverse the intermediary delaying these packets, or the RTT is long enough that a few ms is not enough to result in packets being marked INVALID.

Resolution

After we posted these results in the issue, our users realized these symptoms looked a lot like a Moby libnetwork bug. We adopted a workaround mentioned there, using conntrack to drop INVALID packets in iptables rather than trigger RSTs:

iptables -I INPUT -m conntrack --ctstate INVALID -j DROP

The drawbacks of that approach listed in the bug are acceptable for our uses. After baking a new machine image, we tried to reproduce the issue at scale as we had done while debugging, and were not able to. We updated all of our worker pools to use this image the next day and it seems like we’re now in the clear.

Security Implications

As we uncovered this behavior, there was some concern among the team that this represented a security issue. When conntrack marks a packet as INVALID and it is handled on the host, it’s possible that the same port on the host is in use, and the packet could be treated as part of that connection. However, TCP identifies connections with a “four-tuple” of source IP and port plus destination IP and port, and the tuples cannot match: otherwise the remote end would have been unable to distinguish the connection “through” the NAT from the connection terminating on the host. So there is no issue of confusion between connections here.

However, there is the possibility of a denial of service. If an attacker can guess the four-tuple for an existing connection and forge an INVALID packet matching it, the resulting RST would destroy the connection. This is probably only an issue if the attacker is on the same network as the docker host, as otherwise reverse-path filtering would discard such a forged packet.

At any rate, this issue appears to be fixed in more recent distributions.

Thanks

@hexcles, @djmitche, @imbstack, @stephenmcgruer

December 14, 2018

Wander Lairson Costa

Running packet.net images in qemu

For the past few months, I have been working on adding Taskcluster support for the packet.net cloud provider. The reason for that is to get faster Firefox for Android CI tests. Tests showed that jobs run up to 4x faster on bare metal machines than on EC2.

I set up 25 machines to run a small subset of the production tasks, and so far results are excellent. The problem is that those machines are up 24/7 and there is no dynamic provisioning. If we need more machines, I have to manually change the terraform script to scale it up. We need a smart way to do that. We are going to build something similar to aws-provisioner. However, we need a custom packet.net image to speed up instance startup.

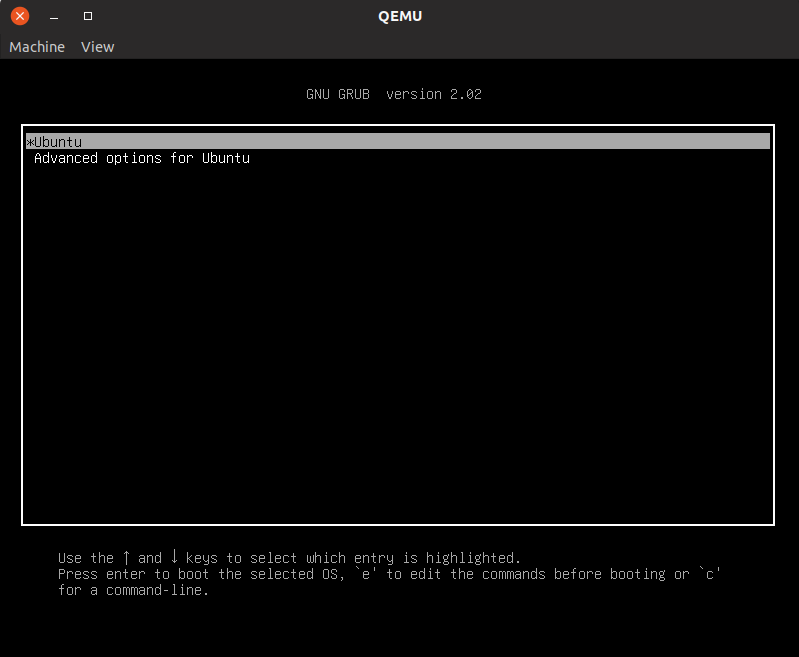

The problem is that if you can’t ssh into the machine, there is no way to get access to it to see what’s wrong. In this post, I am going to show how you can run a packet image locally with qemu.

You can find documentation about creating custom packet images here and here.

Let’s create a sample image for the post. After you clone the packet-images repo, run:

$ ./tools/build.sh -d ubuntu_14_04 -p t1.small.x86 -a x86_64 -b ubuntu_14_04-t1.small.x86-dev

This creates the image.tar.gz file, which is your packet image.

The goal of this post is not to guide you on creating your custom image; you can refer to the documentation linked above for this. The goal here is to show how, once you have your image, you can run it locally with qemu.

The first step is to create a qemu disk to install the image into it:

$ qemu-img create -f raw linux.img 10G

This command creates a raw qemu image. We now need to create a disk partition:

$ cfdisk linux.img

Select dos for the partition table, create a single primary partition and make it bootable. We now need to create a loop device to handle our image:

$ sudo losetup -Pf linux.img

The -f option looks for the first free loop device for attachment to the image file. The -P option instructs losetup to read the partition table and create a loop device for each partition found; this saves us from having to play with disk offsets. Now let’s find our loop device:

$ sudo losetup -l

NAME SIZELIMIT OFFSET AUTOCLEAR RO BACK-FILE DIO LOG-SEC

/dev/loop1 0 0 1 1 /var/lib/snapd/snaps/gnome-calculator_260.snap 0 512

/dev/loop8 0 0 1 1 /var/lib/snapd/snaps/gtk-common-themes_818.snap 0 512

/dev/loop6 0 0 1 1 /var/lib/snapd/snaps/core_5662.snap 0 512

/dev/loop4 0 0 1 1 /var/lib/snapd/snaps/gtk-common-themes_701.snap 0 512

/dev/loop11 0 0 1 1 /var/lib/snapd/snaps/gnome-characters_139.snap 0 512

/dev/loop2 0 0 1 1 /var/lib/snapd/snaps/gnome-calculator_238.snap 0 512

/dev/loop0 0 0 1 1 /var/lib/snapd/snaps/gnome-logs_45.snap 0 512

/dev/loop9 0 0 1 1 /var/lib/snapd/snaps/core_6034.snap 0 512

/dev/loop7 0 0 1 1 /var/lib/snapd/snaps/gnome-characters_124.snap 0 512

/dev/loop5 0 0 1 1 /var/lib/snapd/snaps/gnome-3-26-1604_70.snap 0 512

/dev/loop12 0 0 0 0 /home/walac/work/packet-images/linux.img 0 512

/dev/loop3 0 0 1 1 /var/lib/snapd/snaps/gnome-system-monitor_57.snap 0 512

/dev/loop10 0 0 1 1 /var/lib/snapd/snaps/gnome-3-26-1604_74.snap 0 512

We see that our loop device is /dev/loop12. If we look in the /dev directory:

$ ls -l /dev/loop12*

brw-rw---- 1 root 7, 12 Dec 17 10:39 /dev/loop12

brw-rw---- 1 root 259, 0 Dec 17 10:39 /dev/loop12p1

We see that, thanks to the -P option, losetup created the loop12p1 device for the partition we have. It is time to set up the filesystem:

$ sudo mkfs.ext4 -b 1024 /dev/loop12p1

mke2fs 1.44.4 (18-Aug-2018)

Discarding device blocks: done

Creating filesystem with 10484716 1k blocks and 655360 inodes

Filesystem UUID: 2edfe9f2-7e90-4c35-80e2-bd2e49cad251

Superblock backups stored on blocks:

8193, 24577, 40961, 57345, 73729, 204801, 221185, 401409, 663553,

1024001, 1990657, 2809857, 5120001, 5971969

Allocating group tables: done

Writing inode tables: done

Creating journal (65536 blocks): done

Writing superblocks and filesystem accounting information: done

Ok, finally we can mount our device and extract the image to it:

$ mkdir mnt

$ sudo mount /dev/loop12p1 mnt/

$ sudo tar -xzf image.tar.gz -C mnt/

The last step is to install the bootloader. As we are running an Ubuntu image, we will use grub2 for that.

Firstly we need to install grub in the boot sector:

$ sudo grub-install --boot-directory mnt/boot/ /dev/loop12

Installing for i386-pc platform.

Installation finished. No error reported.

Notice we point to the boot directory of our image. Next, we have to generate the grub.cfg file:

$ cd mnt/

$ for i in /proc /dev /sys; do sudo mount -B $i .$i; done

$ sudo chroot .

# cd /etc/grub.d/

# chmod -x 30_os-prober

# update-grub

Generating grub configuration file ...

Warning: Setting GRUB_TIMEOUT to a non-zero value when GRUB_HIDDEN_TIMEOUT is set is no longer supported.

Found linux image: /boot/vmlinuz-3.13.0-123-generic

Found initrd image: /boot/initrd.img-3.13.0-123-generic

done

We bind mount the /dev, /proc and /sys host mount points inside the Ubuntu image, then chroot into it. Next, to avoid grub creating entries for our host OSes, we disable the 30_os-prober script. Finally we run update-grub and it creates the /boot/grub/grub.cfg file.

Now the only thing left is cleanup:

# exit

$ for i in dev/ sys/ proc/; do sudo umount $i; done

$ cd ..

$ sudo umount mnt/

The commands are self-explanatory. Now let’s run our image:

$ sudo qemu-system-x86_64 -enable-kvm -hda /dev/loop12

And that’s it, you now can run your packet image locally!

August 29, 2018

John Ford

Shrinking Go Binaries

A bit of background is that Go binaries are static binaries which have the Go runtime and standard library built into them. This is great if you don't care about binary size but not great if you do.

To reproduce my results, you can do the following:

go get -u -t -v github.com/taskcluster/taskcluster-lib-artifact-go

cd $GOPATH/src/github.com/taskcluster/taskcluster-lib-artifact-go

git checkout 6f133d8eb9ebc02cececa2af3d664c71a974e833

time (go build) && wc -c ./artifact

time (go build && strip ./artifact) && wc -c ./artifact

time (go build -ldflags="-s") && wc -c ./artifact

time (go build -ldflags="-w") && wc -c ./artifact

time (go build -ldflags="-s -w") && wc -c ./artifact

time (go build && upx -1 ./artifact) && wc -c ./artifact

time (go build && upx -9 ./artifact) && wc -c ./artifact

time (go build && strip ./artifact && upx -1 ./artifact) && wc -c ./artifact

time (go build && strip ./artifact && upx --brute ./artifact) && wc -c ./artifact

time (go build && strip ./artifact && upx --ultra-brute ./artifact) && wc -c ./artifact

time (go build && strip ./artifact && upx -9 ./artifact) && wc -c ./artifact

Since I was removing a lot of debugging information, I figured it'd be worthwhile checking that stack traces are still working. To ensure that I could definitely crash, I decided to panic with an error immediately on program startup.

Even with binary stripping and the maximum compression, I'm still able to get valid stack traces. A reduction from 9MB to 2MB is definitely significant. The binaries are still large, but they're much smaller than what we started out with. I'm curious if we can apply this same configuration to other areas of the Taskcluster Go codebase with similar success, and if the reduction in size is worthwhile there.

I think that using strip and upx -9 is probably the best path forward. This combination provides enough of a benefit over the non-upx options that the time tradeoff is likely worth the effort.

August 28, 2018

John Ford

Taskcluster Artifact API extended to support content verification and improve error detection

Background

At Mozilla, we're developing the Taskcluster environment for doing Continuous Integration, or CI. One of the fundamental concerns in a CI environment is being able to upload and download files created by each task execution. We call them artifacts. For Mozilla's Firefox project, an example of how we use artifacts is that each build of Firefox generates a product archive containing a build of Firefox, an archive containing the test files we run against the browser and an archive containing the compiler's debug symbols which can be used to generate stacks when unit tests hit an error.

The problem

In the old Artifact API, we had an endpoint which generated a signed S3 url that was given to the worker which created the artifact. This worker could upload anything it wanted at that location. This is not to suggest malicious usage, but that any errors or early termination of uploads could result in a corrupted artifact being stored in S3 as if it were a correct upload.

If you created an artifact with the local contents "hello-world\n", but your internet connection dropped midway through, the S3 object might only contain "hello-w". This went silently uncaught until something much later down the pipeline (hopefully!) complained that the file it got was corrupted. This corruption is the cause of many orange-factor bugs, but we have no way to figure out exactly where the corruption is happening.

Our old API also made artifact handling in tasks very challenging. It would often require a task writer to use one of our client libraries to generate a Taskcluster-Signed-URL and Curl to do uploads. For a lot of cases, this is fraught with hazards. Curl doesn't fail on errors by default (!!!), and Curl doesn't automatically handle "Content-Encoding: gzip" responses without "Accept: gzip", which we sometimes need to serve. It requires each user to figure all of this out for themselves, each time they want to use artifacts.

We also had a "Completed Artifact" pulse message which didn't actually send anything useful. It would send a message when the artifact was allocated in our metadata tables, not when the artifact was actually complete. We could mark a task as being completed before all of the artifacts had finished uploading. In practice, this was avoided by not calling complete on the task before the uploads were done, but that was only a convention.

Our solution

We wanted to address a lot of issues with Taskcluster Artifacts. Specifically the following issues are ones which we've tackled:

- Corruption during upload should be detected

- Corruption during download should be detected

- Corruption of artifacts should be attributable

- S3 Eventual Consistency error detection

- Caches should be able to verify whether they are caching valid items

- Completed Artifact messages should only be sent when the artifact is actually complete

- Tasks should be unresolvable until all uploads are finished

- Artifacts should be really easy to use

- Artifacts should be able to be uploaded with browser-viewable gzip encoding

Code

Here's the code we wrote for this project:

- https://github.com/taskcluster/remotely-signed-s3 -- A library which wraps the S3 APIs using the lower level S3 REST Api and uses the aws4 request signing library

- https://github.com/taskcluster/taskcluster-lib-artifact -- A light wrapper around remotely-signed-s3 to enable JS based uploads and downloads

- https://github.com/taskcluster/taskcluster-lib-artifact-go -- A library and CLI written in Go

- https://github.com/taskcluster/taskcluster-queue/commit/6cba02804aeb05b6a5c44134dca1df1b018f1860 -- The final Queue patch to enable the new Artifact API

Upload Corruption

If an artifact is uploaded with a different set of bytes to those which were expected, we should fail the upload. The S3 V4 signatures system allows us to sign a request's headers, which include the X-Amz-Content-Sha256 and Content-Length headers. This means that the request headers we get back from signing can only be used for a request which sets X-Amz-Content-Sha256 and Content-Length to the values provided at signing. S3 checks that the Sha256 checksum and the length of each request's body match the values provided in these headers.

The requests we get from the Taskcluster Queue can only be used to upload the exact file we asked permission to upload. This means that the only set of bytes that will allow the request(s) to S3 to complete successfully will be the ones we initially told the Taskcluster Queue about.

The two main cases we're protecting against here are disk and network corruption. The file ends up being read twice, once to hash and once to upload. Since we have the hash calculated, we can be sure to catch corruption if the two hashes or sizes don't match. Likewise, the possibility of network interruption or corruption is handled because the S3 server will report an error if the connection is interrupted or corrupted before data matching the Sha256 hash exactly is uploaded.
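As a rough illustration of the "read twice" idea, here is a minimal local sketch. It is not the code in our libraries: the function names `hash_and_measure` and `read_for_upload` are hypothetical, and in the real flow it is S3 that rejects a body that does not match the signed headers. The sketch only shows how a hash and size taken on the first read expose a file that changed before the second read.

```python
import hashlib
import os
import tempfile


def hash_and_measure(path, chunk_size=64 * 1024):
    """First read: stream the file, returning (sha256 hex digest, byte count)."""
    digest = hashlib.sha256()
    size = 0
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
            size += len(chunk)
    return digest.hexdigest(), size


def read_for_upload(path, expected_sha256, expected_size):
    """Second read: return the bytes to send, or raise if they no longer match."""
    with open(path, "rb") as f:
        body = f.read()
    if len(body) != expected_size:
        raise ValueError("size changed between hashing and upload")
    if hashlib.sha256(body).hexdigest() != expected_sha256:
        raise ValueError("sha256 changed between hashing and upload")
    return body


with tempfile.TemporaryDirectory() as workdir:
    artifact = os.path.join(workdir, "artifact.txt")
    with open(artifact, "wb") as f:
        f.write(b"hello-world\n")
    sha256, size = hash_and_measure(artifact)

    # Simulate on-disk truncation between the two reads.
    with open(artifact, "wb") as f:
        f.write(b"hello-w")
    try:
        read_for_upload(artifact, sha256, size)
    except ValueError as err:
        print("upload refused:", err)
```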

This does not protect against all broken files from being uploaded. This is an important distinction to make. If you upload an invalid zip file, but no corruption occurs once you pass responsibility to taskcluster-lib-artifact, we're going to happily store this defective file, but we're going to ensure that every step down the pipeline gets an exact copy of this defective file.

Download Corruption

Like corruption during upload, we could experience corruption or interruptions during downloading. In order to combat this, we set some metadata on the artifacts in S3. We set some extra headers during uploading:

- x-amz-meta-taskcluster-content-sha256 -- The Sha256 of the artifact passed into a library -- i.e. without our automatic gzip encoding

- x-amz-meta-taskcluster-content-length -- The number of bytes of the artifact passed into a library -- i.e. without our automatic gzip encoding

- x-amz-meta-taskcluster-transfer-sha256 -- The Sha256 of the artifact as passed over the wire to S3 servers. In the case of identity encoding, this is the same value as x-amz-meta-taskcluster-content-sha256. In the case of Gzip encoding, it is almost certainly not identical.

- x-amz-meta-taskcluster-transfer-length -- The number of bytes of the artifact as passed over the wire to S3 servers. In the case of identity encoding, this is the same value as x-amz-meta-taskcluster-content-length. In the case of Gzip encoding, it is almost certainly not identical.

Important to note is that because these are non-standard headers, verification requires explicit action on the part of the artifact downloader. That's a big part of why we've written supported artifact downloading tools.
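To make the four headers concrete, here is a small sketch of how a client might produce and check them. This is an assumption-laden illustration rather than the code in our libraries: `build_metadata` and `verify_download` are hypothetical names, the artifact is held in memory, and the `content-encoding` key is assumed to be how the downloader learns whether the body was gzipped.

```python
import gzip
import hashlib

PREFIX = "x-amz-meta-taskcluster-"


def build_metadata(content, use_gzip=False):
    """Return (headers, wire_bytes), hashing both before and after encoding."""
    wire = gzip.compress(content) if use_gzip else content
    headers = {
        PREFIX + "content-sha256": hashlib.sha256(content).hexdigest(),
        PREFIX + "content-length": str(len(content)),
        PREFIX + "transfer-sha256": hashlib.sha256(wire).hexdigest(),
        PREFIX + "transfer-length": str(len(wire)),
        "content-encoding": "gzip" if use_gzip else "identity",
    }
    return headers, wire


def _check(label, data, headers):
    """Compare data against the recorded length and sha256 for the given label."""
    if len(data) != int(headers[PREFIX + label + "-length"]):
        raise ValueError(label + " length mismatch")
    if hashlib.sha256(data).hexdigest() != headers[PREFIX + label + "-sha256"]:
        raise ValueError(label + " sha256 mismatch")


def verify_download(headers, wire):
    """Check the bytes as transferred, then the decoded bytes; return the content."""
    _check("transfer", wire, headers)
    if headers["content-encoding"] == "gzip":
        content = gzip.decompress(wire)
    else:
        content = wire
    _check("content", content, headers)
    return content


original = b"hello-world\n" * 100
headers, wire = build_metadata(original, use_gzip=True)
assert verify_download(headers, wire) == original

try:
    verify_download(headers, wire[:-5])  # simulate a truncated download
except ValueError as err:
    print("download rejected:", err)
```

Checking the transfer values first means a truncated gzip stream is rejected before any attempt to decompress it.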

Attribution of Corruption

Corruption is inevitable in a massive system like Taskcluster. What's really important is that when corruption happens we detect it and we know where to focus our remediation efforts. In the new Artifact API, we can zero in on the culprit for corruption.

With the old Artifact API, we don't have any way to figure out if an artifact is corrupted or where that happened. We never know what the artifact was on the build machine, we can't verify corruption in caching systems and when we have an invalid artifact downloaded on a downstream task, we don't know whether it is invalid because the file was defective from the start or if it was because of a bad transfer.

Now, we know that if the Sha256 checksum of the downloaded artifact matches the one recorded at upload and the file is still invalid, the original file was broken before it was uploaded. We can build caching systems which ensure that the value that they're caching is valid and alert us to corruption. We can track corruption to detect issues in our underlying infrastructure.

Completed Artifact Messages and Task Resolution

Previously, as soon as the Taskcluster Queue stored the metadata about the artifact in its internal tables and generated a signed url for the S3 object, the artifact was marked as completed. This behaviour resulted in a slightly deceptive message being sent. Nobody cares when this allocation occurs, but someone might care about an artifact becoming available.

On a related theme, we also allowed tasks to be resolved before the artifacts were uploaded. This meant that a task could be marked as "Completed -- Success" without actually uploading any of its artifacts. Obviously, we would always be writing workers with the intention of avoiding this error, but having it built into the Queue gives us a stronger guarantee.

We achieved this result by adding a new method to the flow of creating and uploading an artifact and adding a 'present' field in the Taskcluster Queue's internal Artifact table. For those artifacts which are created atomically, and the legacy S3 artifacts, we just set the value to true. For the new artifacts, we set it to false. When you finish your upload, you have to run a complete artifact method. This is sort of like a commit.

In the complete artifact method, we verify that S3 sees the artifact as present and only once it's completed do we send the artifact completed message. Likewise, in the complete task method, we ensure that all artifacts have a present value of true before allowing the task to complete.
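The commit-like flow can be modelled in a few lines. This is a toy in-memory sketch, not the Queue's implementation: the class `ToyQueue`, its method names, and the `exists_in_storage` argument (standing in for the check against S3) are all hypothetical.

```python
class ToyQueue:
    """In-memory model of the 'present' flag; not the real Queue."""

    def __init__(self):
        self.present = {}   # (task_id, artifact_name) -> bool
        self.messages = []  # stand-in for pulse messages

    def create_artifact(self, task_id, name, atomic=False):
        # Atomically created and legacy artifacts start out present;
        # new-style artifacts must be completed explicitly.
        self.present[(task_id, name)] = atomic

    def complete_artifact(self, task_id, name, exists_in_storage):
        # exists_in_storage stands in for asking S3 whether the object is there.
        if not exists_in_storage:
            raise RuntimeError("artifact %s is not in storage yet" % name)
        self.present[(task_id, name)] = True
        self.messages.append(("artifact-completed", task_id, name))

    def complete_task(self, task_id):
        missing = sorted(
            name
            for (tid, name), present in self.present.items()
            if tid == task_id and not present
        )
        if missing:
            raise RuntimeError("artifacts still uploading: " + ", ".join(missing))
        self.messages.append(("task-completed", task_id))


queue = ToyQueue()
queue.create_artifact("task-1", "public/build.zip")
try:
    queue.complete_task("task-1")
except RuntimeError as err:
    print("refused:", err)

queue.complete_artifact("task-1", "public/build.zip", exists_in_storage=True)
queue.complete_task("task-1")
print(queue.messages)
```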

S3 Eventual Consistency and Caching Error Detection

S3 works on an Eventual consistency model for some operations in some regions. Caching systems also have a certain level of tolerance for corruption. We're now able to determine whether the bytes we're downloading are those which we expect. We can now rely on more than http status code to know whether the request worked.

In both of these cases we can programmatically check if the download is corrupt and try again as appropriate. In the future, we could even build smarts into our download libraries and tools to request caches involved to drop their data or try bypassing caches as a last resort.
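A "check and try again" loop might look like the following sketch. It is illustrative only: `download_with_retry` and the `fetch` callable are hypothetical, and a real client would also add a delay between attempts, which is left out here for brevity.

```python
import hashlib


def download_with_retry(fetch, expected_sha256, attempts=3):
    """Call fetch() until the body hashes to expected_sha256.

    Returns (body, attempts_used); raises IOError if every attempt fails.
    """
    for attempt in range(1, attempts + 1):
        body = fetch()
        if hashlib.sha256(body).hexdigest() == expected_sha256:
            return body, attempt
    raise IOError("no valid copy after %d attempts" % attempts)


# Simulate a stale or truncated response followed by a good one.
responses = iter([b"hello-w", b"hello-world\n"])
expected = hashlib.sha256(b"hello-world\n").hexdigest()

body, used = download_with_retry(lambda: next(responses), expected)
print("got %d bytes after %d attempt(s)" % (len(body), used))
```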

Artifacts should be easy to use

Right now, if you're working with artifacts directly, you're probably having a hard time. You have to use something like Curl and build urls or signed urls. You've probably hit pitfalls like Curl not exiting with an error on a non-200 HTTP Status. You're not getting any content verification. Basically, it's hard.

Taskcluster is about enabling developers to do their job effectively. Something so critical to CI usage as artifacts should be simple to use. To that end, we've implemented libraries for interacting with artifacts in Javascript and Go. We've also implemented a Go based CLI for interacting with artifacts in the build system or shell scripts.

Javascript

The Javascript client uses the same remotely-signed-s3 library that the Taskcluster Queue uses internally. It's a really simple wrapper which provides a put() and get() interface. All of the verification of requests is handled internally, as is decompression of Gzip resources. This was primarily written to enable integration in Docker-Worker directly.

Go

We also provide a Go library for downloading and uploading artifacts. This is intended to be used in the Generic-Worker, which is written in Go. The Go Library uses the minimum useful interface in the Standard I/O library for inputs and outputs. We're also doing type assertions to do even more intelligent things on those inputs and outputs which support it.

CLI

For all other users of Artifacts, we provide a CLI tool. This provides a simple interface to interact with artifacts. The intention is to make it available in the path of the task execution environment, so that users can simply call "artifact download --latest $taskId $name --output browser.zip".

Artifacts should allow serving to the browser in Gzip

We want to enable large text files which compress extremely well with Gzip to be rendered by web browsers. An example is displaying and transmitting logs. Because of limitations in S3 around Content-Encoding and its complete lack of content negotiation, we have to decide when we upload an artifact whether or not it should be Gzip compressed.

There's an option in the libraries to support automatic Gzip compression of things we're going to upload. We chose Gzip over possibly-better encoding schemes because this is a one time choice at upload time, so we wanted to make sure that the scheme we used would be broadly implemented.

Further Improvements

As always, there are still some things around artifact handling that we'd like to improve upon. For starters, we should work on splitting artifact handling out of our Queue. We've already agreed on a design for how we should store artifacts. This involves splitting all of the artifact handling out of the Queue into a different service and having the Queue track only which artifacts belong to each task run.

We're also investigating an idea to store each artifact in the region it is created in. Right now, all artifacts are stored in EC2's US West 2 region. We could have a situation where a build vm and test vm are running on the same hypervisor in US East 1, but each artifact has to be uploaded and downloaded via US West 2.

Another area we'd like to work on is supporting other clouds. Taskcluster ideally supports whichever cloud provider you'd like to use. We want to support other storage providers than S3, and splitting out the low level artifact handling gives us a huge maintainability win.

Possible Contributions

We're always open to contributions! A great one that we'd love to see is allowing concurrency of multipart uploads in Go. It turns out that this is a lot more complicated than I'd like it to be in order to support passing in the low level io.Reader interface. We'd want to do some type assertions to see if the input supports io.ReaderAt, and if not, use a per-go-routine offset and file mutex to guard around seeking on the file. I'm happy to mentor this project, so get in touch if that's something you'd like to work on.

Conclusion

This project has been a really interesting one for me. It gave me an opportunity to learn the Go programming language and work with the underlying AWS Rest API. It's been an interesting experience after being heads down in Node.js code and has been a great reminder of how to use static, strongly typed languages. I'd forgotten how nice a real type system was to work with!

Integration into our workers is still ongoing, but I wanted to give an overview of this project to keep everyone in the loop. I'm really excited to see a reduction in the number of corrupted artifacts.

August 22, 2018

Dustin Mitchell

Introducing CI-Admin

A major focus of recent developments in Firefox CI has been putting control of the CI process in the hands of the engineers working on the project. For the most part, that means putting configuration in the source tree. However, some kinds of configuration don’t fit well in the tree. Notably, configuration of the trees themselves must reside somewhere else.

CI-Configuration

This information is collected in the ci-configuration repository.

This is a code-free library containing YAML files describing various aspects of the configuration – currently the available repositories (projects.yml) and actions.

This repository is designed to be easy to modify by anyone who needs to modify it, through the usual review processes. It is even Phabricator-enabled!

CI-Admin

Historically, we’ve managed this sort of configuration by clicking around in the Taskcluster Tools. The situation is analogous to clicking around in the AWS console to set up a cloud deployment – it works, and it’s quick and flexible. But it gets harder as the configuration becomes more complex, it’s easy to make a mistake, and it’s hard to fix that mistake. Not to mention, the tools UI shows a pretty low-level view of the situation that does not make common questions (“Is this scope available to cron jobs on the larch repo?”) easy to answer.

The devops world has faced down this sort of problem, and the preferred approach is embodied in tools like Puppet or Terraform:

- write down the desired configuration in human-parsable text files

- check it into a repository and use the normal software-development processes (CI, reviews, merges..)

- apply changes with a tool that enforces the desired state

This “desired state” approach means that the tool examines the current configuration, compares it to the configuration expressed in the text files, and makes the necessary API calls to bring the current configuration into line with the text files. Typically, there are utilities to show the differences, partially apply the changes, and so on.
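The core of the “desired state” comparison fits in a short sketch. This is not ci-admin's code: `plan_changes` is a hypothetical function, resources are modelled as a plain mapping from name to configuration, and the resource names in the example are invented. Whether a given tool deletes resources that are absent from the text files is also an assumption here.

```python
def plan_changes(current, desired):
    """Compare two {name: config} mappings and list the actions needed
    to bring `current` into line with `desired`."""
    actions = []
    for name in sorted(desired.keys() - current.keys()):
        actions.append(("create", name))
    for name in sorted(desired.keys() & current.keys()):
        if current[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(current.keys() - desired.keys()):
        actions.append(("delete", name))
    return actions


# Invented example resources, for illustration only.
current = {
    "role/example-try": {"scopes": ["queue:create-task:low"]},
    "role/example-old": {"scopes": []},
}
desired = {
    "role/example-try": {"scopes": ["queue:create-task:low", "queue:route:index"]},
    "role/example-release": {"scopes": ["queue:create-task:high"]},
}

for action, name in plan_changes(current, desired):
    print(action, name)
```

Showing the planned actions without applying them gives the "diff" view, and applying only some of them gives a partial apply.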

The ci-configuration repository contains those human-parsable text files.

The tool to enforce that state is ci-admin.

It has some generic resource-manipulation support, along with some very Firefox-CI-specific code to do weird things like hashing .taskcluster.yml.

Making Changes

The current process for making changes is a little cumbersome. In part, that’s intentional: this tool controls the security boundaries we use to separate try from release, so its application needs to be carefully controlled and subject to significant human review. But there’s also some work to do to make it easier (see below).

The process is this:

- make a patch to either or both repos, and get review from someone in the “Build Config - Taskgraph” module

- land the patch

- get someone with the proper access to run ci-admin apply for you (probably the reviewer can do this)

Future Plans

Automation

We are in the process of setting up some automation around these repositories. This includes Phabricator, Lando, and Treeherder integration, along with automatic unit test runs on push.

More specific to this project, we also need to check that the current and expected configurations match.

This needs to happen on any push to either repo, but also in between pushes: someone might make a change “manually”, or some of the external data sources (such as the Hg access-control levels for a repo) might change without a commit to the ci-configuration repo.

We will do this via a Hook that runs ci-admin diff periodically, notifying relevant people when a difference is found.

These results, too, will appear in Treeherder.

Grants

One of the most intricate and confusing aspects of configuration for Firefox CI is the assignment of scopes to various jobs.

The current implementation uses a cascade of role inheritance and * suffixes which, frankly, no human can comprehend.

The new plan is to “grant” scopes to particular targets in a file in ci-configuration.

Each grant will have a clear purpose, with accompanying comments if necessary.

Then, ci-admin will gather all of the grants and combine them into the appropriate role definitions.
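To make the idea concrete, here is a small sketch of how grants might be combined into role definitions. The grant format (`grant` and `to` keys), the scope names, and the role names are assumptions made up for illustration; the real file in ci-configuration may look quite different.

```python
from collections import defaultdict

# Hypothetical grants: each lists some scopes and the roles that receive them.
grants = [
    {
        # let both repos create build tasks
        "grant": ["queue:create-task:example/build"],
        "to": ["repo:example/try", "repo:example/central"],
    },
    {
        # only the release-train repo may read the signing secret
        "grant": ["secrets:get:example/signing"],
        "to": ["repo:example/central"],
    },
]


def roles_from_grants(grants):
    """Gather all grants and combine them into {role: sorted scopes}."""
    scopes_by_role = defaultdict(set)
    for g in grants:
        for role in g["to"]:
            scopes_by_role[role].update(g["grant"])
    return {role: sorted(scopes) for role, scopes in sorted(scopes_by_role.items())}


roles = roles_from_grants(grants)
```

Each role's scopes can then be traced back to a specific, commented grant, rather than to a cascade of inherited roles.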

Worker Configurations

At the moment, the configuration of, say, aws-provisioner-v1/gecko-t-large is a bit of a mystery.

It’s visible to some people in the AWS-provisioner tool, if you know to look there.

But that definition also contains some secret data, so it is not publicly visible like roles or hooks are.

In the future, we’d like to generate these configurations based on ci-configuration.

That both makes it clear how a particular worker type is configured (instance type, capacity configuration, regions, disk space, etc.), and allows anyone to propose a modification to that configuration – perhaps to try a new instance type.

Terraform Provider

As noted above, ci-admin is fairly specific to the needs of Firefox CI.

Other users of Taskcluster would probably want something similar, although perhaps a bit simpler.

Terraform is already a popular tool for configuring cloud services, and supports plug-in “providers”.

It would not be terribly difficult to write a terraform-provider-taskcluster that can create roles, hooks, clients, and so on.

This is left as an exercise for the motivated user!


June 15, 2018

Dustin Mitchell

Actions as Hooks

You may already be familiar with in-tree actions: they allow you to do things like retrigger, backfill, and cancel Firefox-related tasks.

They implement any “action” on a push that occurs after the initial hg push operation.

This article goes into a bit of detail about how this works, and a major change we’re making to that implementation.

History

Until very recently, actions worked like this:

First, the decision task (the task that runs in response to a push and decides what builds, tests, etc. to run) creates an artifact called actions.json.

This artifact contains the list of supported actions and some templates for tasks to implement those actions.

When you click an action button (in Treeherder or the Taskcluster tools, or any UI implementing the actions spec), code running in the browser renders that template and uses it to create a task, using your Taskcluster credentials.

I talk a lot about functionality being in-tree. Actions are yet another example. Actions are defined in-tree, using some pretty straightforward Python code. That means any engineer who wants to change or add an action can do so – no need to ask permission, no need to rely on another engineer’s attention (aside from review, of course).

There’s Always a Catch: Security

Since the beginning, Taskcluster has operated on a fairly simple model: if you can accomplish something by pushing to a repository, then you can accomplish the same directly. At Mozilla, the core source-code security model is the SCM level: try-like repositories are at level 1, project (twig) repositories at level 2, and release-train repositories (autoland, central, beta, etc.) are at level 3. Similarly, LDAP users may have permission to push to level 1, 2, or 3 repositories. The current configuration of Taskcluster assigns the same scopes to users at a particular level as it does to repositories.

If you have such permission, check out your scopes in the Taskcluster credentials tool (after signing in). You’ll see a lot of scopes there.

The Release Engineering team has made release promotion an action. This is not something that every user who can push to a level-3 repository – hundreds of people – should be able to do! Since it involves signing releases, this means that every user who can push to a level-3 repository has scopes involved in signing a Firefox release. It’s not quite as bad as it seems: there are lots of additional safeguards in place, not least of which is the “Chain of Trust” that cryptographically verifies the origin of artifacts before signing.

All the same, this is something we (and the Firefox operations security team) would like to fix.

In the new model, users will not have the same scopes as the repositories they can push to. Instead, they will have scopes to trigger specific actions on task-graphs at specific levels. Some of those scopes will be available to everyone at that level, while others will be available only to more limited groups. For example, release promotion would be available to the Release Management team.

Hooks

This makes actions a kind of privilege escalation: something a particular user can cause to occur, but could not do themselves.

The Taskcluster-Hooks service provides just this sort of functionality:

a hook creates a task using scopes assigned by a role, without requiring the user calling triggerHook to have those scopes.

The user must merely have the appropriate hooks:trigger-hook:.. scope.

So, we have added a “hook” kind to the action spec.

The difference from the original “task” kind is that actions.json specifies a hook to execute, along with well-defined inputs to that hook.

The user invoking the action must have the hooks:trigger-hook:.. scope for the indicated hook.
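The check a UI or service makes here boils down to scope satisfaction. The sketch below is a simplified illustration, not the Taskcluster implementation: the `satisfies` helper and the hook group and hook names are hypothetical. It relies only on the rule that a held scope ending in * matches any scope that starts with the same prefix.

```python
def satisfies(held_scopes, required):
    """Return True if any held scope satisfies the required scope.

    A held scope ending in "*" matches any required scope sharing its
    prefix; otherwise the match must be exact.
    """
    for held in held_scopes:
        if held == required:
            return True
        if held.endswith("*") and required.startswith(held[:-1]):
            return True
    return False


# Hypothetical hook group and hook ID, for illustration only.
required = "hooks:trigger-hook:example-group/retrigger"

developer = ["hooks:trigger-hook:example-group/*"]
outsider = ["hooks:trigger-hook:other-group/*"]

can_developer = satisfies(developer, required)
can_outsider = satisfies(outsider, required)
```

Note that the user's scopes only need to cover triggering the hook; the scopes the resulting task runs with come from the hook's role.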

We have also included some protection against clickjacking, preventing someone with permission to execute a hook from being tricked into executing one maliciously.

Generic Hooks

There are three things we may wish to vary for an action:

- who can invoke the action;

- the scopes with which the action executes; and

- the allowable inputs to the action.

Most of these are configured within the hooks service (using automation, of course). If every action is configured uniquely within the hooks service, then the self-service nature of actions would be lost: any new action would require assistance from someone with permission to modify hooks.

As a compromise, we noted that most actions should be available to everyone who can push to the corresponding repo, have fairly limited scopes, and need not limit their inputs. We call these “generic” actions, and creating a new such action is self-serve. All other actions require some kind of external configuration: allocating the scope to trigger the task, assigning additional scopes to the hook, or declaring an input schema for the hook.

Hook Configuration

The hook definition for an action hook is quite complex: it involves a complex task definition template as well as a large schema for the input to triggerHook.

For decision tasks, cron tasks, and “old” actions, this is defined in .taskcluster.yml, and we wanted to continue that with hook-based actions.

But this creates a potential issue: if a push changes .taskcluster.yml, that push will not automatically update the hooks – such an update requires elevated privileges and must be done by someone who can sanity-check the operation.

To solve this, ci-admin creates hooks based on the .taskcluster.yml it finds in any Firefox repository, naming each after a hash of the file’s content.

Thus, once a change is introduced, it can “ride the trains”, using the same hash in each repository.
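A minimal sketch of content-based naming follows. The choice of SHA-256, the truncation to ten characters, and the `example-action` prefix are assumptions for illustration, not necessarily what ci-admin does.

```python
import hashlib


def hook_id_for(taskcluster_yml: bytes, prefix: str = "example-action") -> str:
    """Name a hook after a hash of the .taskcluster.yml content."""
    digest = hashlib.sha256(taskcluster_yml).hexdigest()
    return f"{prefix}/{digest[:10]}"


# Two repositories carrying the same file content share one hook name...
central = hook_id_for(b"version: 1\ntasks: []\n")
beta = hook_id_for(b"version: 1\ntasks: []\n")

# ...while a changed file gets a new name, leaving the old hook in place
# for repositories the change has not yet reached.
changed = hook_id_for(b"version: 1\ntasks: [new]\n")
```

Because the name depends only on the content, a repository that has not yet received a change keeps using the hook for the old content.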

Implementation and Implications

As of this writing, two common actions are operating as hooks: retrigger and backfill. Both are “generic” actions, so the next step is to start to implement some actions that are not generic. Ideally, nobody notices anything here: it is merely an implementation change.

Once all actions have been converted to hooks, we will begin removing scopes from users. This will have a more significant impact: lots of activities such as manually creating tasks (including edit-and-create) will no longer be allowed. We will try to balance the security issues against user convenience here. Some common activities may be implemented as actions (such as creating loaners). Others may be allowed as exceptions (for example, creating test tasks). But some existing workflows may need to change to accommodate this improvement.

We hope to finish the conversion process in July 2018, with that time largely taken with a slow rollout to accommodate unforeseen implications. When the project is finished, Firefox releases and other sensitive operations will be better-protected, with minimal impact to developers’ existing workflows.

May 21, 2018

Dustin Mitchell

Redeploying Taskcluster: Hosted vs. Shipped Software

The Taskcluster team’s work on redeployability means switching from a hosted service to a shipped application.

A hosted service is one where the authors of the software are also running the main instance of that software. Examples include GitHub, Facebook, and Mozillians. By contrast, a shipped application is deployed multiple times by people unrelated to the software’s authors. Examples of shipped applications include GitLab, Joomla, and the Rust toolchain. And, of course, Firefox!

Hosted Services

Operating a hosted service can be liberating. Blog posts describe the joys of continuous deployment – even deploying the service multiple times per day. Bugs can be fixed quickly, either by rolling back to a previous deployment or by deploying a fix.

Deploying new features on a hosted service is pretty easy, too. Even a complex change can be broken down into phases and accomplished without downtime. For example, changing the backend storage for a service can be accomplished by modifying the service to write to both old and new backends, mirroring existing data from old to new, switching reads to the new backend, and finally removing the code to write to the old backend. Each phase is deployed separately, with careful monitoring. If anything goes wrong, rollback to the old backend is quick and easy.
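The phased backend switch can be sketched in a few lines of Python. This is a toy model under stated assumptions: plain dicts stand in for the two backends, and the `MigratingStore` class and its phase names are invented for illustration. In a real service, each phase change would be a separate, monitored deployment.

```python
class MigratingStore:
    """Toy store that moves from an old backend to a new one in phases."""

    PHASES = ("old_only", "dual_write", "read_new", "new_only")

    def __init__(self, old: dict, new: dict):
        self.old, self.new = old, new
        self.phase = "old_only"

    def write(self, key, value):
        # Keep writing to the old backend until the final phase, so that
        # rolling back to it remains possible.
        if self.phase != "new_only":
            self.old[key] = value
        if self.phase != "old_only":
            self.new[key] = value

    def read(self, key):
        use_new = self.phase in ("read_new", "new_only")
        return (self.new if use_new else self.old)[key]

    def backfill(self):
        """Mirror existing data from old to new without clobbering newer writes."""
        for key, value in self.old.items():
            self.new.setdefault(key, value)


old, new = {"a": 1}, {}
store = MigratingStore(old, new)

store.phase = "dual_write"   # phase 1: write to both backends
store.write("b", 2)
store.backfill()             # phase 2: mirror existing data
store.phase = "read_new"     # phase 3: switch reads to the new backend
result = (store.read("a"), store.read("b"))
store.phase = "new_only"     # phase 4: stop writing to the old backend
store.write("c", 3)
```

Until the last phase the old backend still receives every write, which is what makes a rollback quick and easy.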

Hosted service developers are often involved with operation of the service, and operational issues can frequently be diagnosed or even corrected with modifications to the software. For example, if a service is experiencing performance issues due to particular kinds of queries, a quick deployment to identify and reject those queries can keep the service up, followed by a patch to add caching or some other approach to improve performance.

Shipped Applications

A shipped application is sent out into the world to be used by other people. Those users may or may not use the latest version, and certainly will not update several times per day (the heroes running Firefox Nightly being a notable exception). So, many versions of the application will be running simultaneously. Some applications support automatic updates, but many users want to control when – and if – they update. For example, upgrading a website built with a CMS like Joomla is a risky operation, especially if the website has been heavily customized.