If you were to ask my parents or sister what my favourite hobby was as a child, they’d say something along the lines of “sitting in front of our family computer”. I’d spend hours browsing the internet, usually playing Flash games or watching early YouTube videos. Most of my memories of using the computer are now a blur; however, one detail stands out. I distinctly remember that our family computer used Mozilla Firefox as its primary internet browser. So imagine my surprise when I was offered an opportunity to intern here at Mozilla!

In the midst of my third year studying Computer Engineering at the University of Toronto, I was searching for a 12-month internship to complete my Professional Experience Year (PEY) Co-op credit. Incredibly, I landed the privilege of working at Mozilla for 12 months alongside 17 other students. Coincidentally, one of my closest friends from high school would be completing his internship at Mozilla too!

As a Software Engineer (SWE) Intern, I was hired onto the Localization (L10N) team and would be based out of the Toronto office. I had already connected with both my manager, Francesco “Flod” Lodolo, and my mentor, Matjaž Horvat, before my start date. I couldn’t wait to begin my internship, and after I finished my final exam of third year, I began counting down the days.

LGTM! (Onboarding)

From our first day at the office, I knew I was going to love working here. The Toronto office is so vibrant and filled with some truly amazing people! After finishing the office tour with the rest of the interns, we booted up our computers and began installing all our tools. Luckily for me, Ayanaa (who was the previous SWE Intern on the Localization team) was in the office too. She would be here until the end of August, helping to mentor and guide me along the way.

With her help, I got started on some bug fixes in Pontoon, Mozilla’s translation management system. I was mainly using Python (specifically the Django framework) and JavaScript/TypeScript (React) for the duration of the internship. Since I had some prior internship experience with these tools, I was able to hit the ground running, and by the end of my third month I had already completed 12 tickets! Matjaž and Flod were both instrumental in my progress, and with their help, I narrowed down the larger projects I wanted to work on for the rest of my internship.

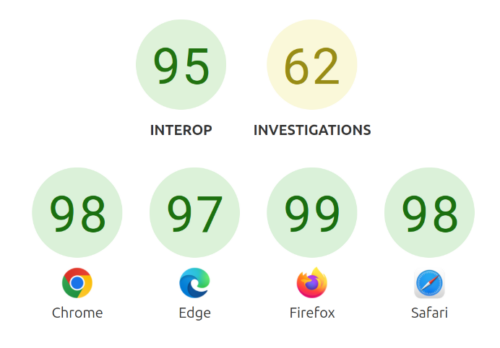

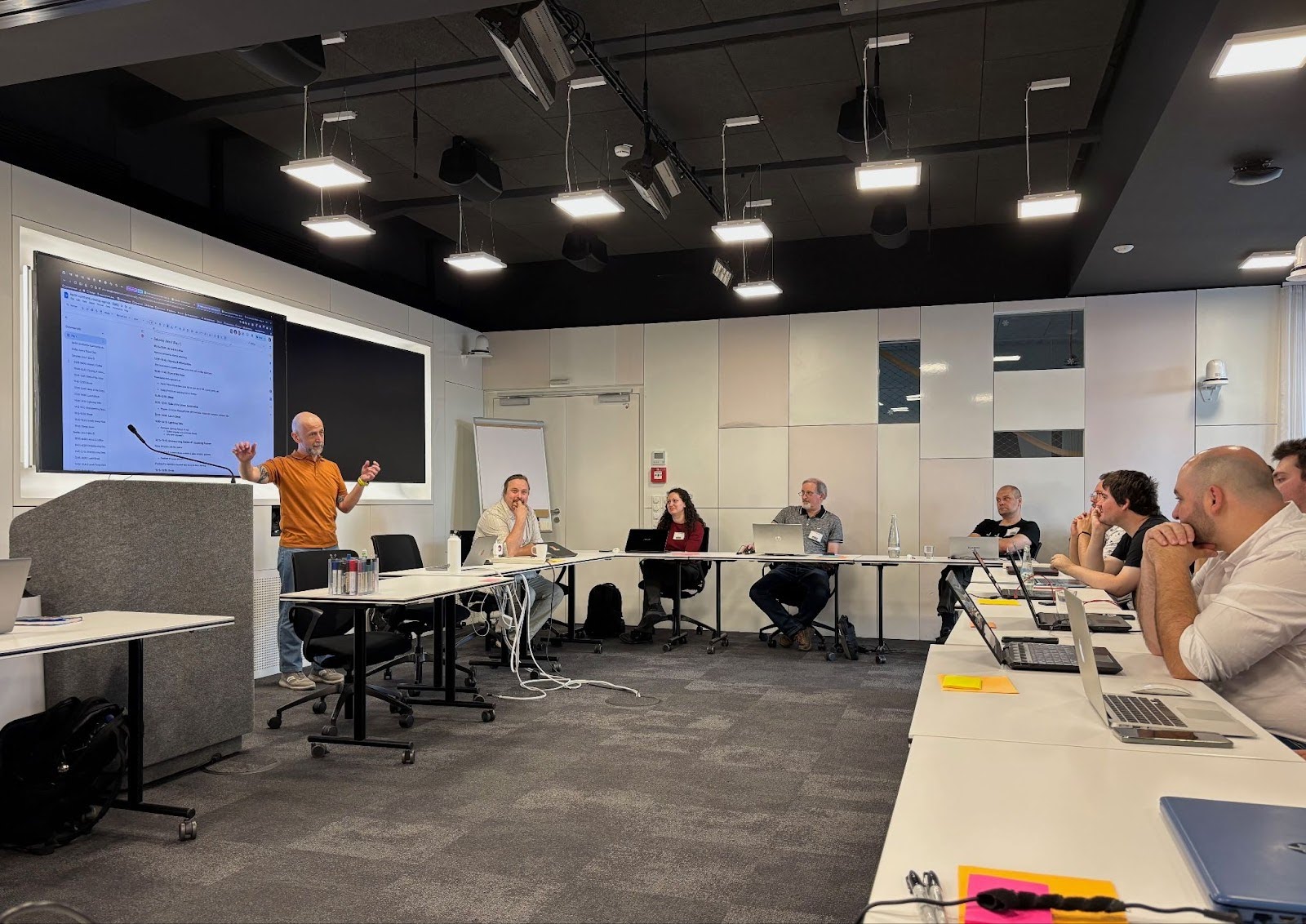

I also took an interest in web standards within my first few months. Eemeli, the other engineer on our team, was an active contributor to the MessageFormat2 API, a new Unicode standard for localization. With his support, I was able to attend the MessageFormat Working Group’s weekly meetings. These meetings included some of the most influential and experienced people in this domain, from many large companies and organizations.

Our first day tour of the Toronto office!

Coast to Continent to Coast (MozWeek and Work Week)

Around the middle of August, we were given the opportunity to attend MozWeek 2024, our annual week-long, company-wide conference. MozWeek 2024 was held in Dublin, Ireland, so this was my first time ever travelling to Europe! From day one, the atmosphere at The Convention Centre Dublin was electric. I could tell a lot of thought, planning, and care went into creating the best possible experience for all employees. Throughout the week, we attended plenary talks, workshops, and strategic meetings.

Since Mozilla is a remote-first international company, this was the first time I had met any of my full-time colleagues in person. It was so nice to finally see and chat with them away from my laptop screen. We even had our team dinner next to the famous Temple Bar! In our free time, the other interns and I had a blast walking through the streets of Dublin and exploring what Ireland has to offer.

The interns and I at the MozWeek 2024 Closing Party, hosted at the Guinness Storehouse.

Dublin wasn’t my only travel destination though. Each team meets up once a year in one of Mozilla’s many office spaces across the world. Owing to our remote-first policy, these ‘Work Weeks’ are an opportunity for teams to reflect on the past year and align on OKRs for the coming year. Our Work Week happened in November, in sunny San Mateo, California, marking my first time on the West Coast! The Work Week was a great experience filled with good food, and it was super fun to explore San Francisco in my free time.

L10N team dinner at Porterhouse Restaurant San Mateo!

Building for a Better Web (Projects Overview)

One of my favourite parts of working at Mozilla was that almost all of my work was public-facing. I worked on three major projects during my internship, so here’s a brief description of each:

Pontoon Search

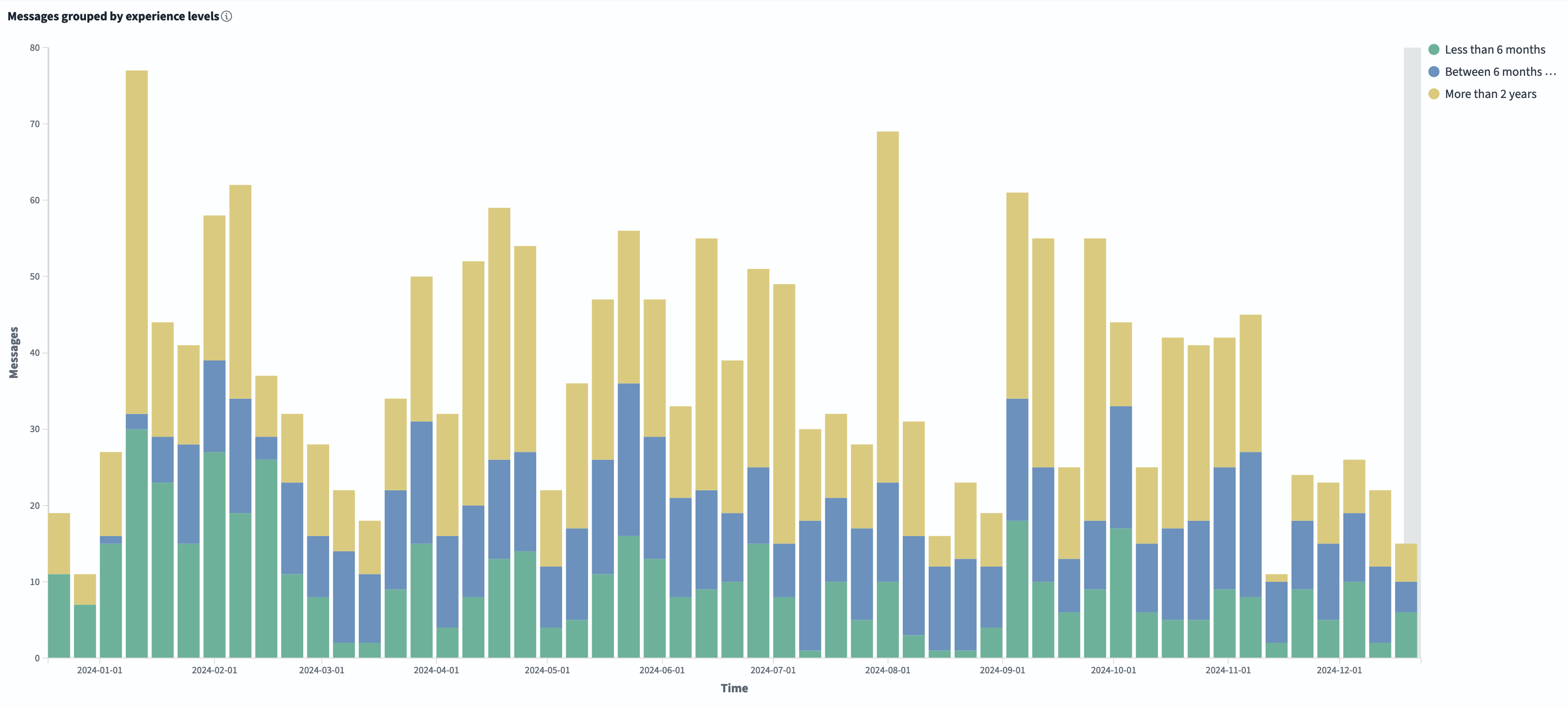

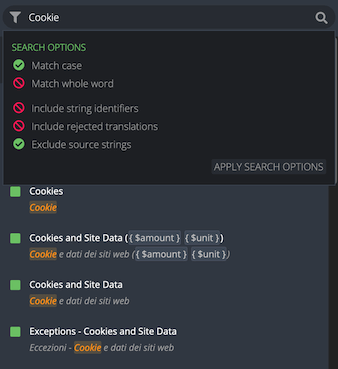

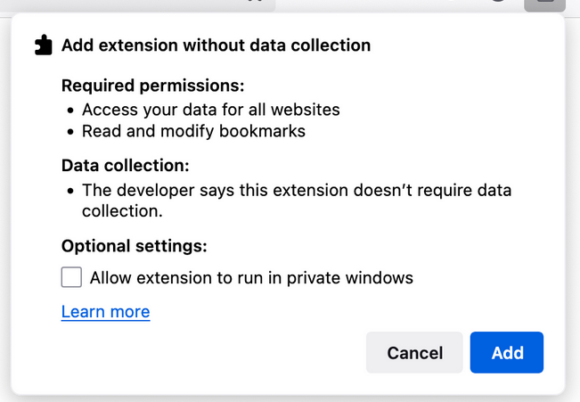

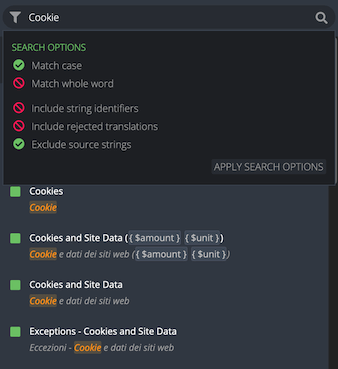

My first major project had me improving Pontoon’s search capabilities. Despite the many filters Pontoon already offered for sifting through over 4.5 million strings, there were still no options for common filters like ‘Match Case’, nor a way to limit a search to specific elements, like the source text. My job was to create a new full-stack feature that lets users refine their search queries. By leveraging TypeScript, React, and Django’s ORM capabilities, I created a new search panel with five options for users to toggle:

Improving search in Pontoon not only made the user experience more streamlined, but also improved Pontoon’s API capabilities, which were later used in the Mozilla Language Portal (see below).
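To illustrate how a ‘Match Case’ toggle and a “search only in” option can map onto Django’s ORM, here is a minimal sketch that only builds the lookup arguments, so it runs without Django installed. The field paths in `SEARCHABLE_FIELDS` and the `build_search_lookups` helper are hypothetical, not Pontoon’s actual code; the only real detail relied on is that Django offers both a `contains` lookup (case-sensitive on PostgreSQL) and a case-insensitive `icontains` lookup.

```python
from typing import Iterable

# Hypothetical field paths for the parts of a string a user might search.
# These are illustrative assumptions, not Pontoon's real model fields.
SEARCHABLE_FIELDS = {
    "source": "entity__string",
    "translation": "string",
    "comment": "entity__comment",
}


def build_search_lookups(
    query: str, fields: Iterable[str], match_case: bool = False
) -> list[dict[str, str]]:
    """Return one Django-style lookup dict per field the user chose to search."""
    # "contains" is case-sensitive on PostgreSQL (SQLite treats it like
    # "icontains"); "icontains" always ignores case.
    lookup = "contains" if match_case else "icontains"
    lookups = []
    for name in fields:
        if name not in SEARCHABLE_FIELDS:
            raise ValueError(f"unknown search field: {name!r}")
        lookups.append({f"{SEARCHABLE_FIELDS[name]}__{lookup}": query})
    return lookups


# Search only the source text, with "Match Case" turned on.
print(build_search_lookups("Firefox", ["source"], match_case=True))
```

In a real Django view, each returned dict could be wrapped as `Q(**lookup)` and the results combined with `|` before filtering a queryset; that step is left out here to keep the example self-contained.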

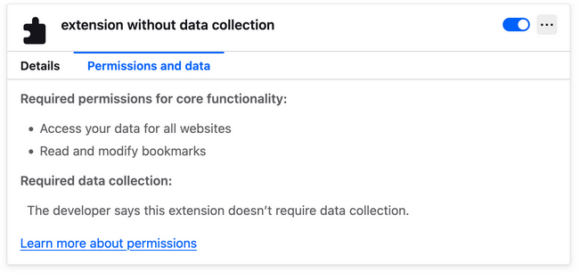

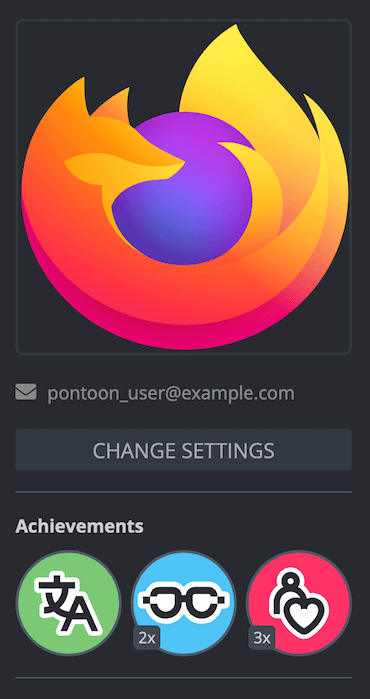

Pontoon Achievement Badges

My second major project involved adding gamification elements to Pontoon. In a nutshell, we wanted to implement achievement badges in Pontoon to recognize contributions made by our vibrant volunteer community, while also promoting positive behaviours on the platform. Ayanaa had created both the proposal document and the technical specification before her term ended, so it was my job to implement the feature. This project mainly involved TypeScript and a bit of Django for counting badge actions, and the initial user feedback was overwhelmingly positive! For more information, check out the blog post I wrote to announce the feature.
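Counting badge actions ultimately means mapping a running count (for example, translations submitted or reviewed) onto a badge level. Here is a minimal sketch of that mapping, assuming the count has already been computed (in Django, for instance, with `.count()` on a filtered queryset). The `LEVEL_THRESHOLDS` values and the `badge_level` helper are hypothetical; Pontoon’s actual badge rules may differ.

```python
from bisect import bisect_right

# Hypothetical action counts needed to unlock each badge level.
# Pontoon's real thresholds may differ.
LEVEL_THRESHOLDS = (5, 50, 250, 1000)


def badge_level(action_count: int) -> int:
    """Return the badge level earned (0 means no badge yet)."""
    if action_count < 0:
        raise ValueError("action_count must be non-negative")
    # bisect_right returns how many thresholds are <= action_count,
    # which equals the number of levels unlocked so far.
    return bisect_right(LEVEL_THRESHOLDS, action_count)


for count in (0, 5, 49, 50, 1200):
    print(count, badge_level(count))
```

Keeping the thresholds in a sorted tuple means new levels can be added without touching the lookup logic.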

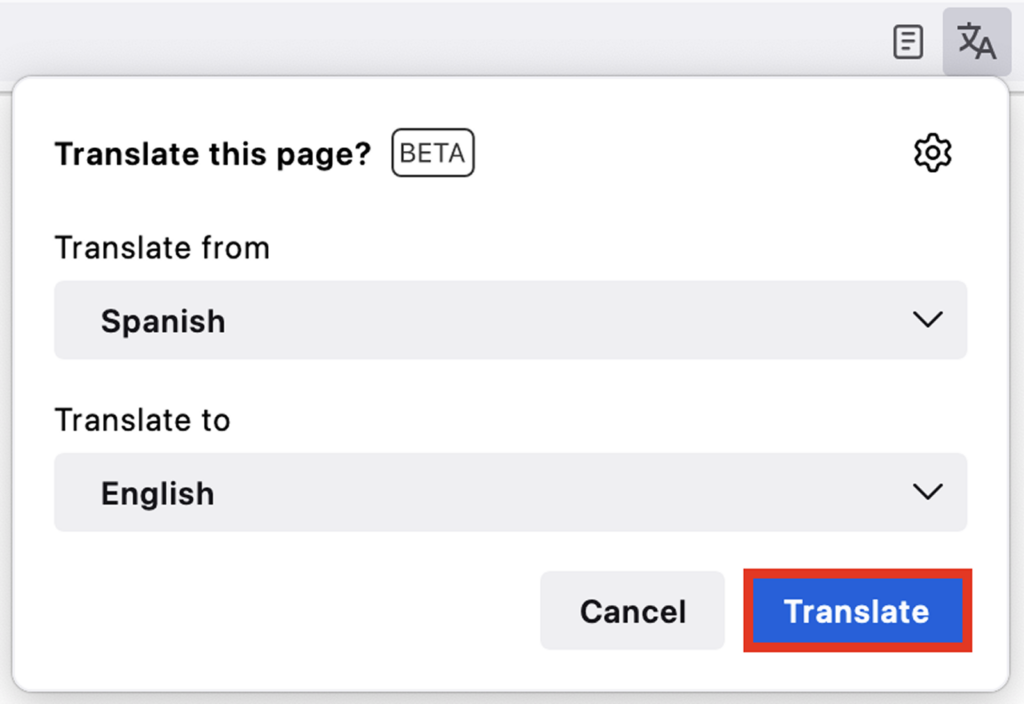

Mozilla Language Portal

My final project, and the one I had the most ownership over, was the creation of the Mozilla Language Portal. For a long time, the localization industry was missing a central hub for sharing knowledge, best practices, and searchable translation memories. We decided to leverage our influence to create the Mozilla Language Portal, in hopes of filling this gap and making localization tools themselves more accessible. We built the Portal using Birdbox, an internal tool created by the Websites team to quickly spin up Mozilla-branded web pages. The deployment of the Portal was handled primarily through Google Cloud Services and Terraform, which was a whole new set of tools for me to learn. The website itself was made using Wagtail CMS, built on top of Django. With the help of the Websites and Site Reliability Engineering teams, I was able to both create the MVP and deploy the site.

Closing Thoughts

Since taking an anthropology course in my third year of university, I’ve come to appreciate how important human connection and social interactions are, especially in this day and age. Most people would agree that technology (in particular the internet) has now thoroughly integrated itself into the fabric of our societies, so I believe it’s in our collective best interest to keep the internet healthy and open. In recent years, it sadly seems like many bad actors are increasing their influence and control over what should be a vital and protected resource. One of my long-term goals is to focus my career on improving the internet and using its influence over society for good.

With this goal in mind, Mozilla, a non-profit organization dedicated to creating an open and accessible web, was naturally a perfect fit for me. Coincidentally, Localization was also the perfect team for me. As a very community-facing team, Localization gave me the unique chance to see the direct results of creating technology to make the internet more accessible, and I was able to explore my burning interests, such as web standards.

I think it goes without saying that the lessons I learned at Mozilla, both from an engineering perspective and from a community perspective, will stick with me for the rest of my career. Regardless of whether I continue as a SWE in the future, I want to focus on creating technology to grow and help humanity, and thus I’ve promised myself to only work for organizations whose missions I align with.

To me, my time at Mozilla will always be emblematic of my growth: as a student, as an engineer, and as an individual. They say all good things must come to an end, but I oddly don’t feel as though my time at Mozilla is coming to an end. The lessons instilled in me and the drive to keep fighting for an open web won’t ever leave me.

Team photo with everyone! Taken in August 2024

Acknowledgements

I’d like to dedicate this section to my amazing team that has supported me and helped me grow both professionally and personally this past year.

To Ayanaa, thank you for being a great coworker, mentor and friend. I’ve been following the path you carved out, both at Mozilla and beyond, and I’m extremely grateful for all the advice and support you gave me throughout.

To Matjaž, I can’t really put into words how helpful and kind you have been to me. You truly have a talent for mentoring, and I’m so incredibly grateful you were my mentor. I hope you continue to inspire others the way you’ve inspired me. Let’s hope LeBron and Luka can win it all (eventually).

To Flod, your support as my manager has been monumental to my professional development. Thank you for being patient with me, and for supporting all of my interests and endeavors during my term. It sounds cliché, but I truly couldn’t have asked for a better manager.

To Eemeli, thank you for supporting my interest in MessageFormat2. Your great sense of humour will definitely stick with me, and you’ve inspired me to carry on your tradition of taking walks during online meetings.

To Bryan, it was always such a pleasure to speak and work with you. I’m glad I had someone else to nerd out with about Pokémon! I really appreciate how we could always find something to talk about.

To Peiying, I loved hearing all about your travel anecdotes during MozWeek and our Work Week. I promise to keep my photo blog updated as long as you do too! I hope to see you and Leo again soon.

To Delphine, your enthusiasm and bubbly personality always brought a smile to my face. It was so nice to finally meet you during our Work Week! Congrats again on all your personal achievements in this past year.

And thank you to all the Mozillians I’ve had the privilege to work with this past year, both in the Toronto office and across the globe. I’m sure our paths will cross again! As they say, “once a Mozillian, always a Mozillian”.

*Thanks for reading, and if you’d like to learn more or connect with me, please feel free to add me on LinkedIn*

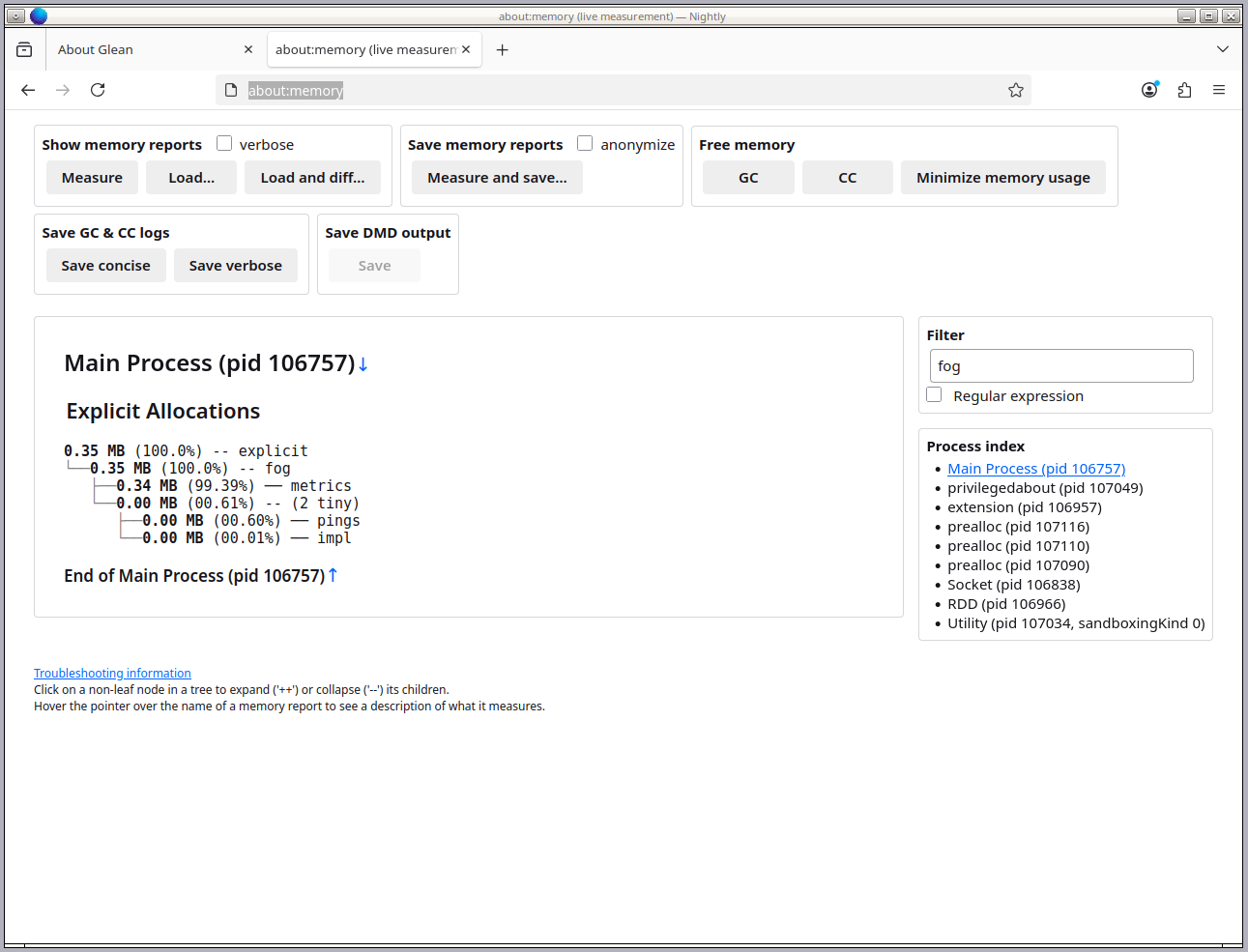

We’ve

We’ve

<figcaption class="wp-element-caption">From Baking With Pride by Janusz Domagala: a lavender sponge cake dressed in buttercream blooms, with a wink to queer-coded history. Credit: The Quarto Group</figcaption>

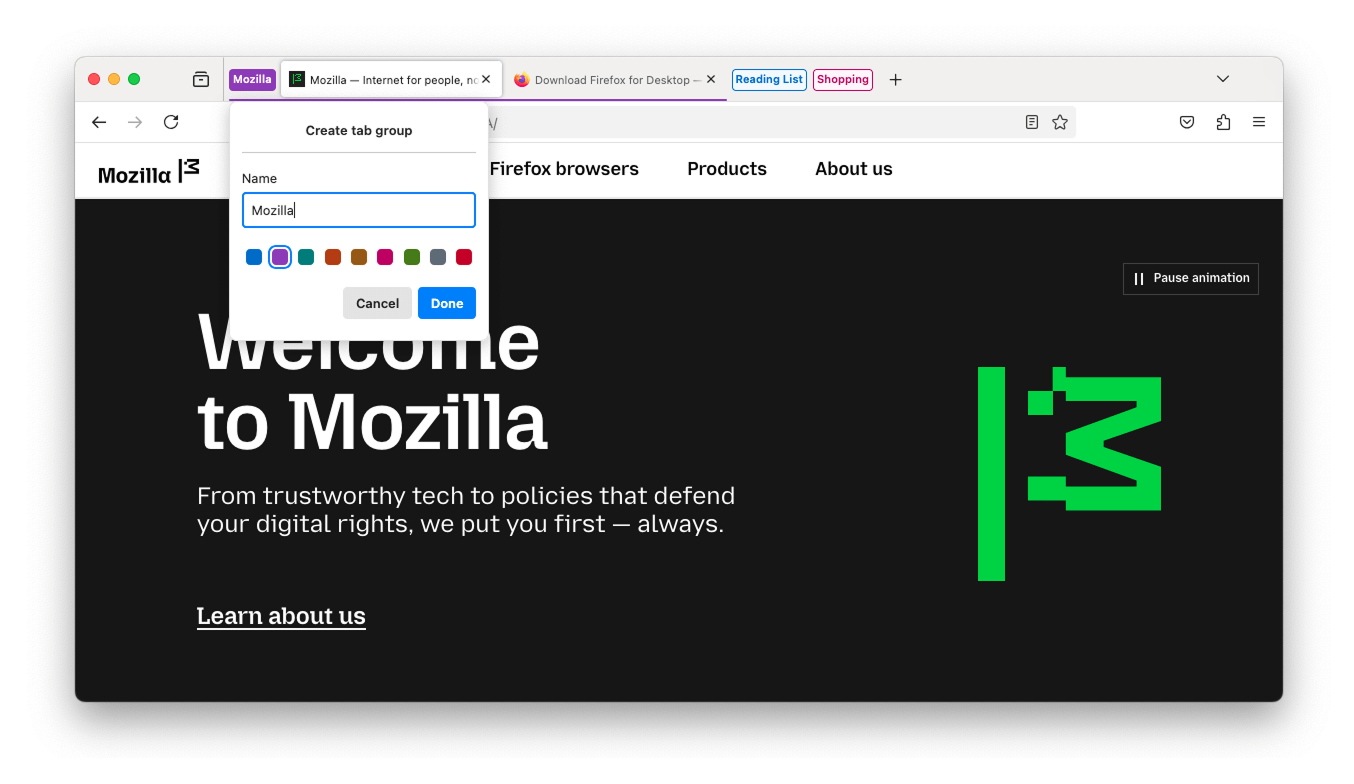

<figcaption class="wp-element-caption">From Baking With Pride by Janusz Domagala: a lavender sponge cake dressed in buttercream blooms, with a wink to queer-coded history. Credit: The Quarto Group</figcaption> <figcaption class="wp-element-caption">Start planning on your laptop, pick it back up on your phone with Firefox sync. </figcaption>

<figcaption class="wp-element-caption">Start planning on your laptop, pick it back up on your phone with Firefox sync. </figcaption>

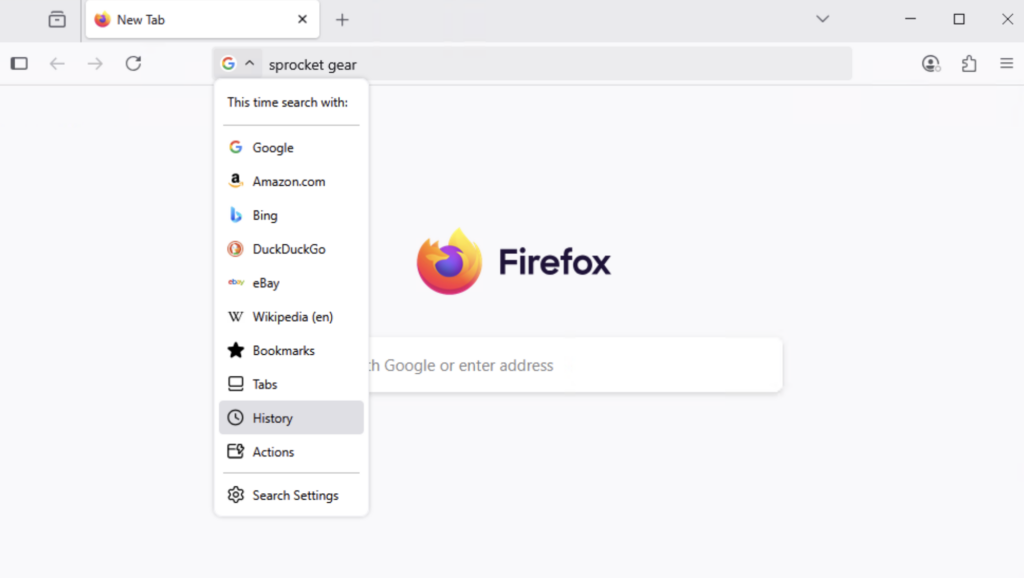

Unified search button

Unified search button

Easily continue your search

Easily continue your search

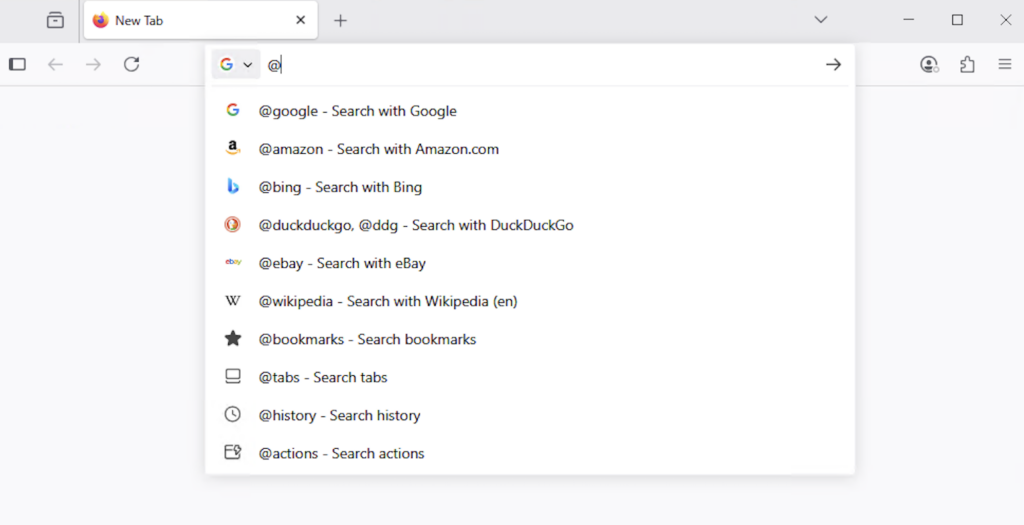

@ Shortcuts

@ Shortcuts

Quick Actions

Quick Actions

Smart shortcuts

Smart shortcuts

HTTPS trim

HTTPS trim

<figcaption>Image via Apple TV+</figcaption>

<figcaption>Image via Apple TV+</figcaption> <figcaption>“Marshmallows are for team players, Dylan.”</figcaption>

<figcaption>“Marshmallows are for team players, Dylan.”</figcaption>